Dynamatic

Dynamatic is an academic, open-source high-level synthesis compiler that produces synchronous dynamically-scheduled circuits from C/C++ code. Dynamatic generates synthesizable RTL which currently targets Xilinx FPGAs and delivers significant performance improvements compared to state-of-the-art commercial HLS tools in specific situations (e.g., applications with irregular memory accesses or control-dominated code). The fully automated compilation flow of Dynamatic is based on MLIR. It is customizable and extensible to target different hardware platforms and easy to use with commercial tools such as Vivado (Xilinx) and Modelsim (Mentor Graphics).

We welcome contributions and feedback from the community. If you would like to participate, please check out our contribution guidelines

Using Dynamatic

To get started using Dynamatic (after setting it up), check out our introductory tutorial, which guides you through your first compilation of C code into a synthesizable dataflow circuit! If you want to start modifying Dynamatic and are new to MLIR or compilers in general, our MLIR primer and pass creation tutorial will help you take your first steps.

Setting up Dynamatic

There are currently two ways to setup and use Dynamatic

1. Build From Source (Recommended)

We support building from source on Linux and on Windows (through WSL). See our Build instructions below. Ubuntu 24.04 LTS is officially supported; other apt-based distributions should work as well. Other distributions may also require cosmetic changes to the dependencies you have to install before running Dynamatic.

2. Use the Provided Virtual Machine

We provide an Ubuntu-based Virtual Machine (VM) that already has Dynamatic and our dataflow circuit visualizer set up. You can use it to simply follow the tutorial (Using Dynamatic) or as a starting point to use/modify Dynamatic in general.

Build Instructions

The following instructions can be used to setup Dynamatic from source.

note

If you intend to modify Dynamatic’s source code and/or build the interactive dataflow circuit visualizer (recommended for circuit debugging), you can check our advanced build instructions to learn how to customize the build process to your needs.

1. Install Dependencies Required by the Project

Most of our dependencies are provided as standard packages on most Linux distributions. Dynamatic needs a working C/C++ toolchain (compiler, linker), cmake and ninja for building the project, Python (3.6 or newer), GraphViz to work with .dot files, and standard command-line tools like git.

On apt-based Linux distributions:

apt-get update

apt-get install clang lld ccache cmake ninja-build python3 graphviz git curl gzip libreadline-dev libboost-all-dev

Note that you may need super user privileges for any package installation. You can use sudo before entering the commands

clang, lld, and ccache are not strictly required but significantly speed up (re)builds. If you do not wish to install them, call the build script with the –disable-build-opt flag to prevent their usage.

Dynamatic utilizes Gurobi to optimize the circuit’s performance. It is optional and Dynamatic will build properly without it but is useful for more optimized results. Refer to our Advanced Build page for guidance on how to setup the Gurobi solver.

tip

While this section helps you install the dependencies needed to get started with Dynamatic, you can find a list of dependencies used by Dynamatic in the dependencies section for a better understanding of how the tool works.

Finally, Dynamatic uses Modelsim or Questa to run simulations.

These are optional tools which you can see how to install in the Advanced Build page if you intend to use the simulator.

tip

Before moving on to the next step, refresh your environment variables in your current terminal to make sure that all newly installed tools are visible in your PATH. Alternatively, open a new terminal and proceed to cloning the project.

2. Cloning the Project and Its Submodules

Dynamatic depends on a fork of LLVM/MLIR. To instruct git to clone the appropriate versions submodules used by Dynamatic, we enable the --recurse-submodules flag.

git clone --recurse-submodules https://github.com/EPFL-LAP/dynamatic.git

This creates a dynamatic folder in your current working directory.

3. Build the Project

Run the build script from the directory created by the clone command (see the advanced build instructions for details on how to customize the build process).

cd dynamatic

chmod +x ./build.sh

./build.sh --release

Using prebuilt LLVM. The commands above might take quite some time to finish since we need to build LLVM. Alternatively, the build.sh script can download a prebuilt LLVM and link Dynamatic against that instead. If this is preferred, you could run the following command:

./build.sh --release --use-prebuilt-llvm

note

You need at least 6GB of free disk space for Dynamatic (if you enable the --use-prebuilt-llvm option).

If you need to build llvm-project from scratch, you will need at least 50GB

of free disk space and 16GB+ of RAM.

4. Run the Dynamatic Testsuite

To confirm that you have successfully compiled Dynamatic and to test its functionality, you can run Dynamatic’s testsuite from the top-level build folder using ninja.

# From the "dynamatic" folder created by the clone command

cd build

ninja check-dynamatic

You can now launch the Dynamatic front-end from Dynamatic’s top level directory using:

./bin/dynamatic

With Dynamatic correctly installed, you can browse the using dynamatic tutorial to learn how to use the basic commands and features in Dynamatic to convert your C code into RTL.

You can also explore the Advanced build options.

Tutorials

Welcome to the Dynamatic tutorials!

To encourage contributions to the project, we aim to support newcomers to the worlds of software development and compilers by providing development tutorials that can help them take their first steps inside the codebase. They are mostly aimed at people who have no or little compiler development experience, especially with the MLIR compiler infrastructure with which Dynamatic is deeply intertwined. Some prior knowledge of C++ (more generally, of object-oriented programming) and of the theory behind dataflow circuits is assumed.

Introduction to Dynamatic

This two-part tutorial first introduces the toolchain and teaches you to use the Dynamatic frontend to synthesize, simulate, and visualize dataflow circuits compiled from C code. The second part guides you through the creation of a small compiler optimization pass and gives you some insight into how the toolchain can help you identify issues in your circuits. This tutorial is a good starting point for anyone wanting to get into Dynamatic, without necessarily modifying it.

The MLIR Primer

This tutorial, heavily based on MLIR’s official language reference, is meant as a quick introduction to MLIR and its core constructs. C++ code snippets are peperred through the tutorial in an attempt to ease newcomers to the framework’s C++ API and provide some initial code guidance.

Creating Compiler Passes

This tutorial goes through the creation of a simple compiler transformation pass that operates on Handshake-level IR (i.e., on dataflow circuits modeled in MLIR). It goes into details into all the code that one needs to write to declare a pass in the codebase, implement it, and then run it on some input code using the dynamatic-opt tool. It then touches on different ways to write the same pass as to give an idea of MLIR’s code transformation capabilities.

Introduction to Dynamatic

This tutorial is meant as the entry-point for new Dynamatic users and will guide you through your first interactions with the compiler and its surrounding toolchain. Following it requires that you have Dynamatic built locally on your machine, either from source or using our custom virtual machine (VM setup instructions).

warning

Note that the virtual machine does not contain an MILP solver; when using frontend scripts, you will have to provide the --simple-buffers flag to the compile command to instruct it to not rely on an MILP solver for buffer placement. Unfortunately, this will affect the circuits you generate as part of the exercises and you may therefore obtain different results from what the tutorial describes.

It is divided in the following two chapters.

- Chapter #1 - Using Dynamatic | We use Dynamatic’s frontend to synthesize our first dataflow circuit from C code, then visualize it using our interactive dataflow visualizer.

- Chapter #2 - Modifying Dynamatic | We write a small compiler transformation pass in C++ to try to improve circuit performance and decrease area, then debug it using the visualizer.

Running an Integration Test

1. Binary Search

This example describes how to use Dynamatic and become more familiarized with its HLS flow. You will see how:

- compile your C code to RTL

- simulate the resulting circuit using ModelSim

- synthesize your circuit using vivado

- visualize your circuit

Source Code

//===- binary_search.c - Search for integer in array -------------*- C -*-===//

//

// Implements the binary_search kernel.

//

//===----------------------------------------------------------------------===//

#include "binary_search.h"

#include "dynamatic/Integration.h"

int binary_search(in_int_t search, in_int_t a[N]) {

int evenIdx = -1;

int oddIdx = -1;

for (unsigned i = 0; i < N; i += 2) {

if (a[i] == search) {

evenIdx = (int)i;

break;

}

}

for (unsigned i = 1; i < N; i += 2) {

if (a[i] == search) {

oddIdx = (int)i;

break;

}

}

int done = -1;

if (evenIdx != -1)

done = evenIdx;

else if (oddIdx != -1)

done = oddIdx;

return done;

}

int main(void) {

in_int_t search = 55;

in_int_t a[N];

for (int i = 0; i < N; i++)

a[i] = i;

CALL_KERNEL(binary_search, search, a);

return 0;

}

This HLS code includes control flow inside loops, limiting pipelining in statically scheduled HLS due to worst-case assumptions—here, the branch is taken and the loop exits early. Dynamically scheduled HLS, like Dynamatic, adapts to runtime behavior. Let’s see how the generated circuit handles control flow more flexibly.

Launching Dynamatic

If you haven’t added Dynamatic to path, navigate to the directory where you cloned Dynamatic and run the command below:

./bin/dynamatic

The Dynamatic frontend would be displayed as follows

username:~/Dynamatic/dynamatic$ ./bin/dynamatic

================================================================================

============== Dynamatic | Dynamic High-Level Synthesis Compiler ===============

======================== EPFL-LAP - v2.0.0 | March 2024 ========================

================================================================================

dynamatic>

Set the Path to the C Target C File

Use the set-src command to direct Dynamatic to the file you want to synthesize into RTL

dynamatic> set-src integration-test/binary_search/binary_search.c

Compile the C File to a Lower Intermediate Representation

You can choose the buffer placement algorithm with the --buffer-algorithm flag. For this example, we use fpga20, a throughput driven algorithm which requires Gurobi installed as describe in the Advanced Build page.

tip

If you are not sure which options are available for the compile command, add anything after it and hit enter to see the options e.g compile –

dynamatic> compile --buffer-algorithm fpga20

[INFO] Compiled source to affine

[INFO] Ran memory analysis

[INFO] Compiled affine to scf

[INFO] Compiled scf to cf

[INFO] Applied standard transformations to cf

[INFO] Applied Dynamatic transformations to cf

[INFO] Compiled cf to handshake

[INFO] Applied transformations to handshake

[INFO] Built kernel for profiling

[INFO] Ran kernel for profiling

[INFO] Profiled cf-level

[INFO] Running smart buffer placement with CP = 4.000 and algorithm = 'fpga20'

[INFO] Placed smart buffers

[INFO] Canonicalized handshake

[INFO] Created binary_search DOT

[INFO] Converted binary_search DOT to PNG

[INFO] Created binary_search_CFG DOT

[INFO] Converted binary_search_CFG DOT to PNG

[INFO] Lowered to HW

[INFO] Compilation succeeded

tip

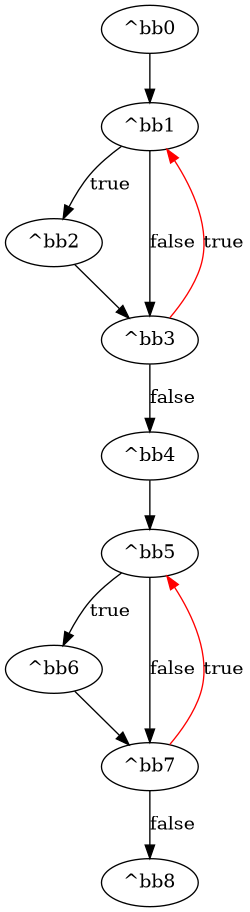

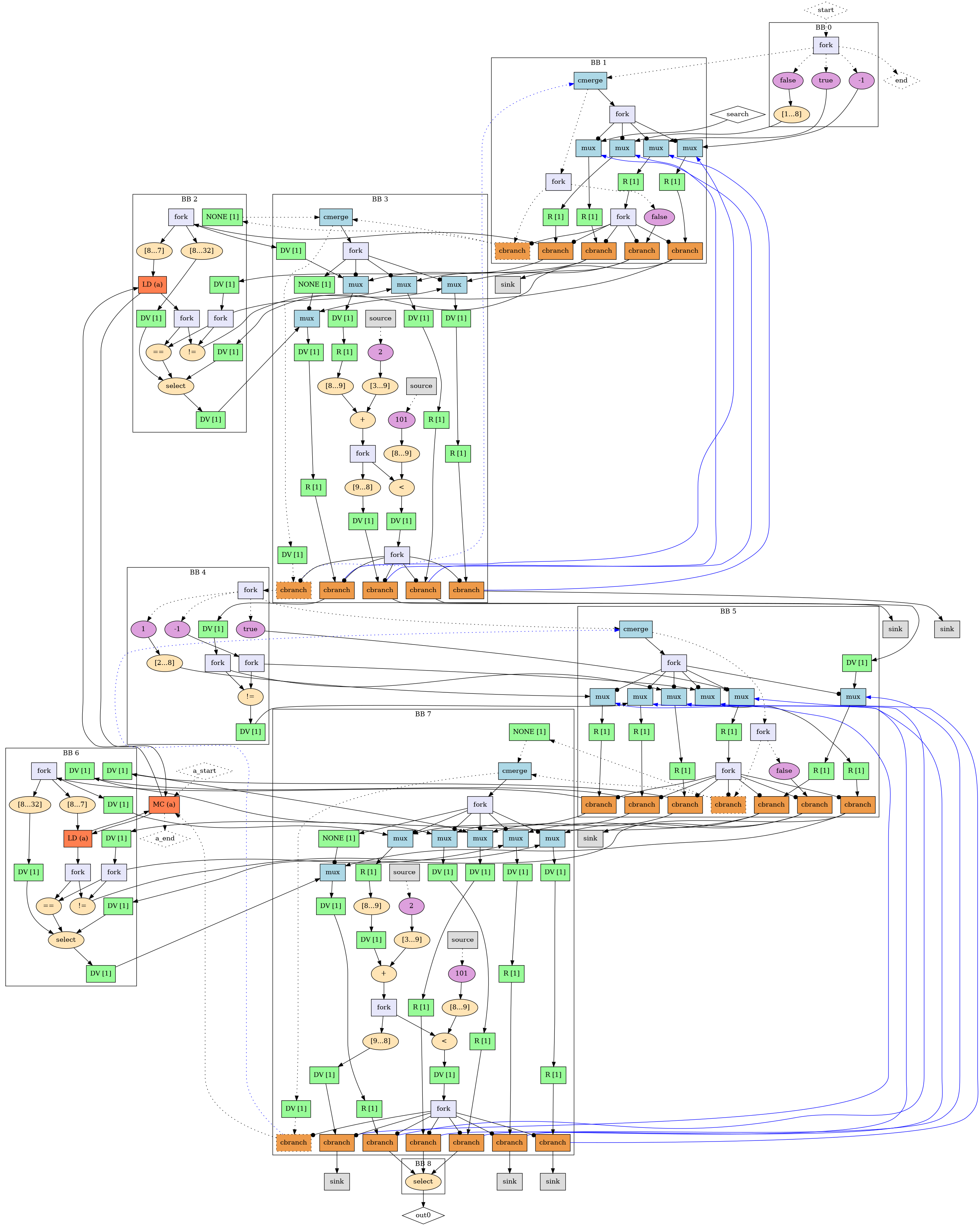

Two PNG files are generated at compile time, kernel_name.png and kernel_name_CFG.png, allowing you to have a preview of your circuit and its control flow graph generated by Dynamatic as shown below.

Binary Search CFG

Binary Search Dataflow Circuit

Generate HDL from mlir File

An mlir file is generated during the compile process.

write-hdl converts it into HDL code for your kernel. The default HDL is VHDL. You can choose verilog or vhdl with the --hdl flag

dynamatic> write-hdl --hdl vhdl

[INFO] Exported RTL (vhdl)

[INFO] HDL generation succeeded

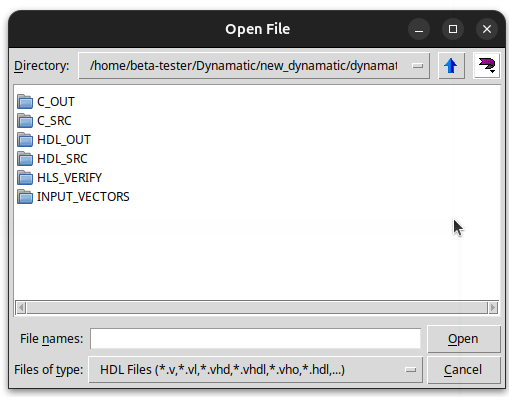

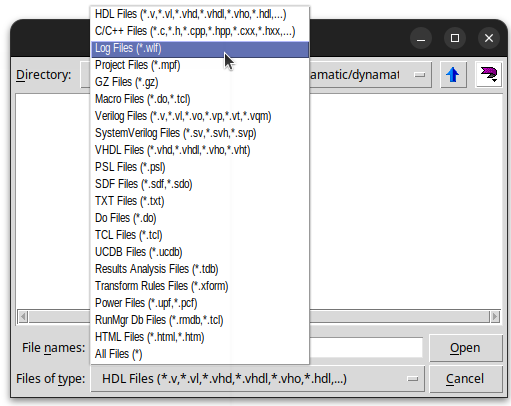

Simulate Your Circuit

This step simulates the kernel in C and HDL (using modelsim) and compares the results for equality.

dynamatic> simulate

[INFO] Built kernel for IO gen.

[INFO] Ran kernel for IO gen.

[INFO] Launching Modelsim simulation

[INFO] Simulation succeeded

Sythesize With Vivado

This step is optional. It allows to get more timing and performance related files using vivado. You must have vivado installed.

dynamatic> synthesize

[INFO] Created synthesis scripts

[INFO] Launching Vivado synthesis

[INFO] Logic synthesis succeeded

note

If this step fails despite you having vivado installed and added to path, source the vivado/vitis settings64.sh in your shell and try again.

warning

Adding the sourcing of the settings64.sh to path may hinder future compilations as the vivado compiler varies from the regular clang compiler on your machine

Visualize and Simulate Your Circuit

By running the visualize command, the Godot GUI will be launched with your dataflow circuit open, and ready to be played with

dynamatic> visualize

[INFO] Generated channel changes

[INFO] Added positioning info. to DOT

[INFO] Launching visualizer...

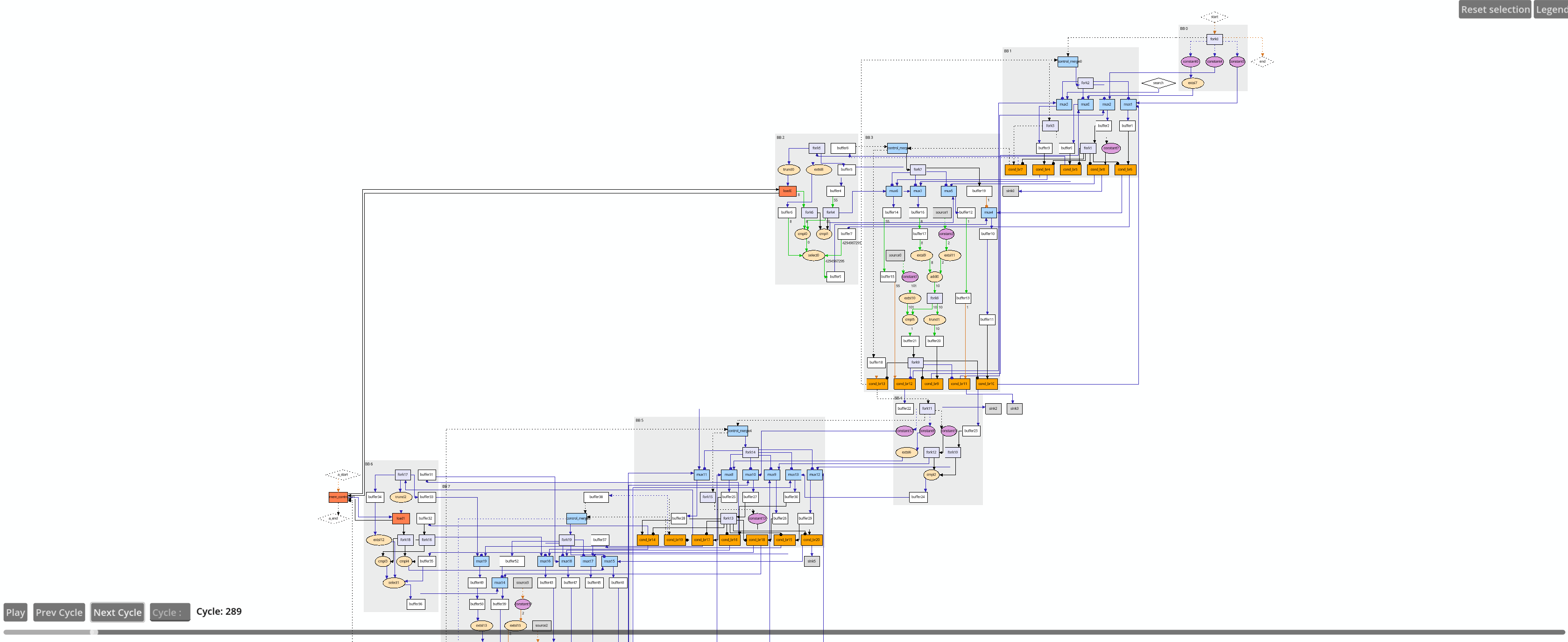

Below is a preview of the circuit in the Godot visualizer

The circuit is too broad to capture in one image but you can move around the preview by clicking, holding, and moving your cursor around. Play with the commands to see your circuit in action.

The circuit is too broad to capture in one image but you can move around the preview by clicking, holding, and moving your cursor around. Play with the commands to see your circuit in action.

Modifying Dynamatic

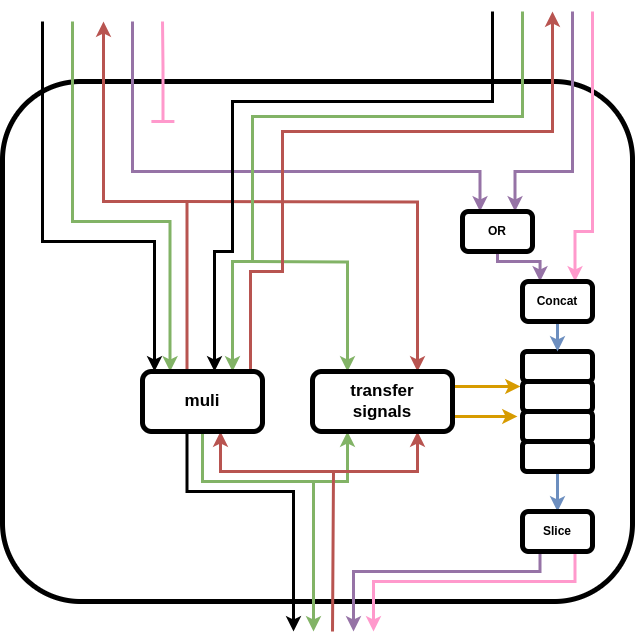

This tutorial logically follows the Using Dynamatic tutorial, and as such requires that you are already familiar with the concepts touched on in the latter. In this tutorial, we will write a small compiler optimization pass in C++ that will transform dataflow muxes into merges in an attempt to optimize our circuits’ area and throughput. While we will write a little bit of C++ in this tutorial, it does not require much knowledge in the language.

Below are some technical details about this tutorial.

- All resources are located in the repository’s

tutorials/Introduction/folder. Data exclusive to this chapter is located in theCh2subfolder, but we will also reuse data from the previous chapter,Ch1. - All relative paths mentionned throughout the tutorial are assumed to start at Dynamatic’s top-level folder.

- We assume that you have already built Dynamatic from source using the instructions in the Installing Dynamatic page or that you have access to a Docker container that has a pre-built version of Dynamatic .

This tutorial is divided into the following sections.

- Spotting an Optimization Opportunity | We take another look at the circuit from the previous tutorial and spot something that looks optimizable.

- Writing a Small Compiler Pass | We implement the optimization as a compiler pass, and add it the compilation script to use it.

- Testing Our Pass | We test our pass to make sure it works as intended, and find out that it may not.

- A problem, and a Solution! | After identifying a problem in one of our circuits, we implement a quick-and-dirty fix to make the circuit correct again.

- Conclusion | We reflect on everything we just accomplished.

Spotting an Optimization Opportunity

Let’s start by re-considering the same loop_multiply kernel (Ch1/loop_multiply.c) from the previous tutorial. See its definition below.

// The kernel under consideration

unsigned loop_multiply(in_int_t a[N]) {

unsigned x = 2;

for (unsigned i = 0; i < N; ++i) {

if (a[i] == 0)

x = x * x;

}

return x;

}

This simple kernel multiplies a number by itself at each iteration of a simple loop from 0 to any number N where the corresponding element of an array equals 0. The function returns the calculated value after the loop exits.

If you have deleted the data generated by the synthesis flow on this kernel, you can regenerate it fully using the loop-multiply.dyn frontend script (Ch2/loop-multiply.dyn) that has already been written for you. Just run the following command from Dynamatic’s top-level folder.

./bin/dynamatic --run tutorials/Introduction/Ch2/loop-multiply.dyn

This will compile the C kernel, functionally verify the generated VHDL, and re-open the dataflow visualizer. Note the [INFO] Simulation succeeded message in the output (after the simulate command), indicating that outputs of the VHDL design matched those of the original C kernel. All output files are generated in tutorials/Introduction/usingDynamatic/out.

tip

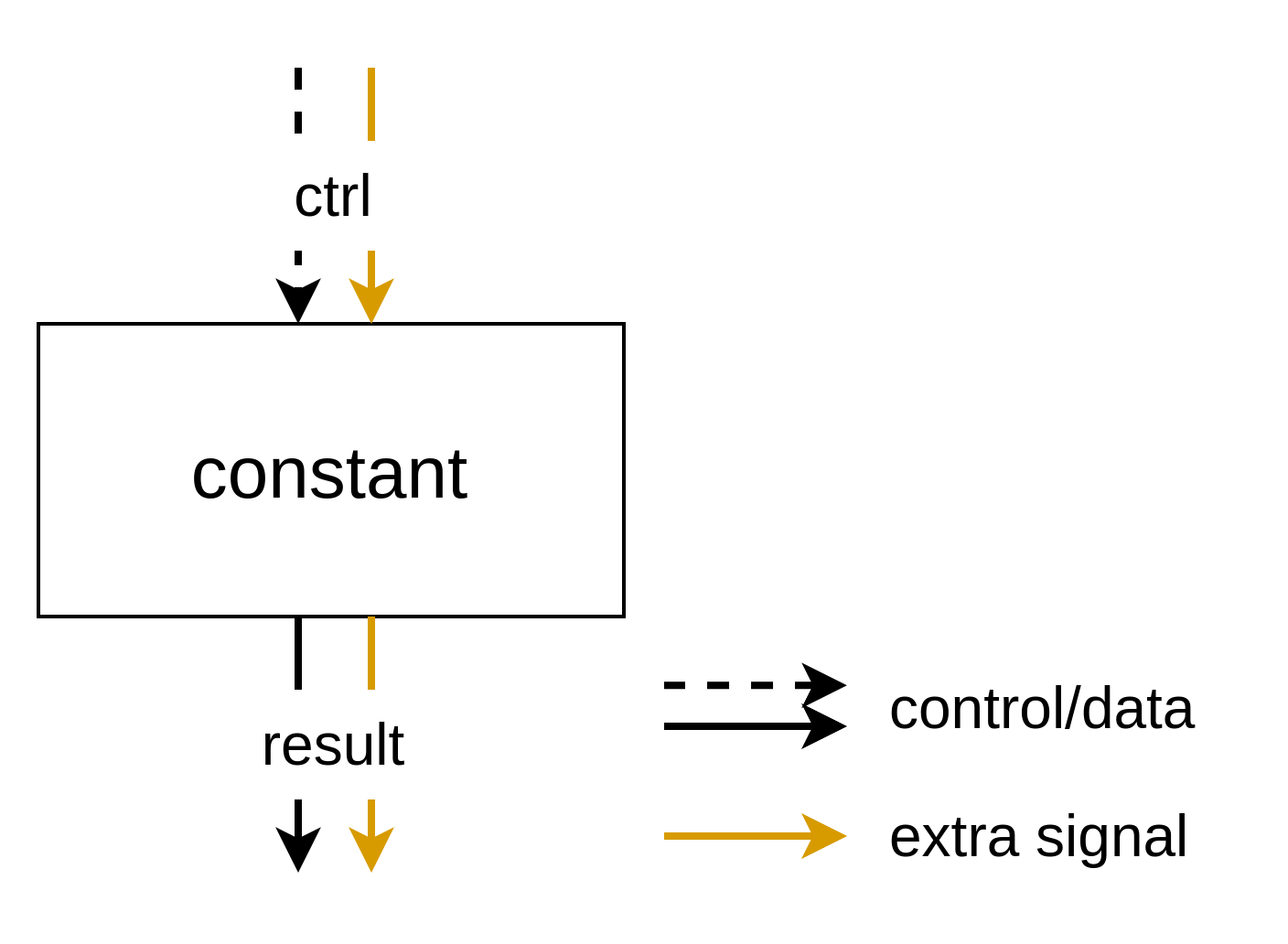

Identify all muxes in the circuit and derive their purpose in this circuit. Remember that muxes have an arbitrary number of data inputs (here it is always 2) and one select input, which selects which valid data input gets forwarded to the output. Note that, in general, the select input of muxes if generated by the index output of the same block’s control merge.

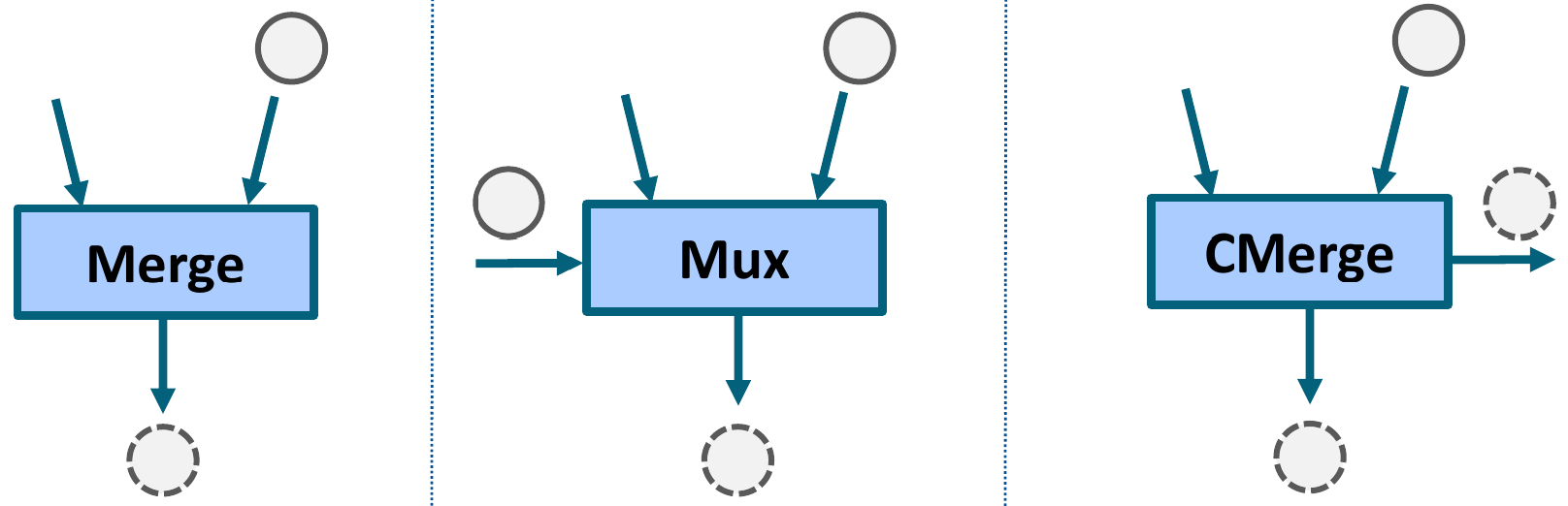

Another dataflow component that is similar to the mux in purpose is the merge. Identically to the mux, the merge has an arbitrary number of data inputs, one of which gets forwarded to the output when it is valid. However, the two dataflow components have two key differences.

- The merge does not have a

selectinput. Instead, at any given cycle, if any of its data input is valid and if its data output is ready, it will transfer a token to the output. - The merge does not provide any guarantee on input consumption order if at any given cycle multiple of its inputs are valid and its data output if ready. In those situations, it will simply transfer one of its input tokens to its output.

Due to this “simpler” interface, a merge is generally smaller in area than a corresponding mux with the same number of data inputs. Replacing a mux with a merge may also speed up circuit execution since the merge does not have to wait for the arrival of a valid select token to transfer one of its data inputs to its output.

Let’s try to make this circuit smaller by writing a compiler pass that will automatically replace all muxes with equivalent merges then!

Writing a Small Compiler Pass

In this section, we will add a small transformation pass that achieves the optimization opportunity we identified in the previous section. We will not go into much details into how C++ or MLIR works, our focus will be instead in writing something minimal that accomplishes the job cleanly. For a more complete tutorial on pass-writing, feel free to go through the “Creating Passes” tutorial after completing this one.

Creating this pass will involve creating 2 new source files and making minor editions to 3 existing source files. In order, we will

- Declare the Pass in TableGen (a LLVM/MLIR language that eventually transpiles to C++).

- Write a Minimal C++ Header for the Pass.

- Implement the Pass in C++.

- Make New Source File We Created Part of Dynamatic’s Build Process.

- Edit a Generic Header to Make Our Pass Visible to Dynamatic’s Optimizer.

Declaring the Pass in TableGen

The first thing we need to do is declare our pass somewhere. In LLVM/MLIR, this happens in the TableGen language, a declarative format that ultimately transpiles to C++ during the build process to automatically generate a lot of boilerplate C++ code.

Open the include/dynamatic/Transforms/Passes.td file and copy-and-paste the following snippet anywhere below the include lines at the top of the file.

def HandshakeMuxToMerge : DynamaticPass<"handshake-mux-to-merge"> {

let summary = "Transform all muxes into merges.";

let description = [{

Transform all muxes within the IR into merges with identical data operands.

}];

let constructor = "dynamatic::createHandshakeMuxToMerge()";

}

This declares a compiler pass whose C++ class name will be based on HandshakeMuxToMerge and which can be called using the --handshake-mux-to-merge flag from Dynamatic’s optimizer (we will go into more details into using Dynamatic’s optimizer in the “Testing our pass” section). The summary and description fields are optional but useful to describe the pass’s purpose. Finally, the constructor field indicates the name of a C++ function that should returns an instance of our pass. We will declare and then define this method in the next two subsections.

A Minimal C++ Header for the Pass

We now need to write a small C++ header for our new pass. Each pass has one, and they are for the large part always structured in the same exact way. Create a file in include/dynamatic/Transforms called HandshakeMuxToMerge.h and paste the following chunk of code into it:

/// Classical C-style header guard

#ifndef DYNAMATIC_TRANSFORMS_HANDSHAKEMUXTOMERGE_H

#define DYNAMATIC_TRANSFORMS_HANDSHAKEMUXTOMERGE_H

/// Include some basic headers

#include "dynamatic/Support/DynamaticPass.h"

#include "dynamatic/Support/LLVM.h"

#include "mlir/Pass/Pass.h"

namespace dynamatic {

/// The following include file is autogenerated by LLVM/MLIR during the build

/// process from the Passes.td file we just edited. We only want to include the

/// part of the file that refers to our pass (it contains delcaration code for

/// all transformation passes), which we select using the two macros below.

#define GEN_PASS_DECL_HANDSHAKEMUXTOMERGE

#define GEN_PASS_DEF_HANDSHAKEMUXTOMERGE

#include "dynamatic/Transforms/Passes.h.inc"

/// The pass constructor, with the same name we specified in TableGen in the

/// previous subsection.

std::unique_ptr<dynamatic::DynamaticPass> createHandshakeMuxToMerge();

} // namespace dynamatic

#endif // DYNAMATIC_TRANSFORMS_HANDSHAKEMUXTOMERGE_H

This file does two important things:

- It includes C++ code auto-generated from the

Passes.tdfile we just edited. - It declares the pass header that we announced in the pass’s TableGen declaration.

Now that all declarations are made, it is time to actually implement our IR transformation!

Implementing the Pass

Create a file in lib/Transforms called HandshakeMuxToMerge.cpp and in which we will implement our pass. Paste the following code into it:

/// Include the header we just created.

#include "dynamatic/Transforms/HandshakeMuxToMerge.h"

/// Include some other useful headers.

#include "dynamatic/Dialect/Handshake/HandshakeOps.h"

#include "dynamatic/Support/CFG.h"

#include "mlir/Transforms/GreedyPatternRewriteDriver.h"

using namespace dynamatic;

namespace {

/// Simple driver for the pass that replaces all muxes with merges.

struct HandshakeMuxToMergePass

: public dynamatic::impl::HandshakeMuxToMergeBase<HandshakeMuxToMergePass> {

void runDynamaticPass() override {

// This is the top-level operation in all MLIR files. All the IR is nested

// within it

mlir::ModuleOp mod = getOperation();

MLIRContext *ctx = &getContext();

// Define the set of rewrite patterns we want to apply to the IR

RewritePatternSet patterns(ctx);

// Run a greedy pattern rewriter on the entire IR under the top-level module

// operation

mlir::GreedyRewriteConfig config;

if (failed(applyPatternsAndFoldGreedily(mod, std::move(patterns), config))) {

// If the greedy pattern rewriter fails, the pass must also fail

return signalPassFailure();

}

};

};

}; // namespace

/// Implementation of our pass constructor, which just returns an instance of

/// the `HandshakeMuxToMergePass` struct.

std::unique_ptr<dynamatic::DynamaticPass>

dynamatic::createHandshakeMuxToMerge() {

return std::make_unique<HandshakeMuxToMergePass>();

}

This file, at the botton, implements the pass constructor we declared in the header. This constuctor returns an instance of a struct defined just above—do not mind the slightly convoluted struct declaration, which showcases the curiously recurring template pattern C++ idiom that is used extensively throuhgout MLIR/Dynamatic—whose single method runDynamaticPass defines what happens when the pass is called. In our case, we want to leverage MLIR’s greedy pattern rewriter infrastructure to match on all muxes in the IR and replace them with merges with identical data inputs. If you would like to know more about how greedy pattern rewriting works, feel free to check out MLIR’s official documentation on the subject. For this simple pass, you do not need to understand exactly how it works, just that it can match and try to rewrite certain operations inside the IR based on a set of user-provided rewrite patterns. Speaking of rewrite patterns, let’s add our own to the file just above the HandshakeMuxToMergePass struct definition. Paste the following into the file.

/// Rewrite pattern that will match on all muxes in the IR and replace each of

/// them with a merge taking the same inputs (except the `select` input which

/// merges do not have due to their undeterministic nature).

struct ReplaceMuxWithMerge : public OpRewritePattern<handshake::MuxOp> {

using OpRewritePattern<handshake::MuxOp>::OpRewritePattern;

LogicalResult matchAndRewrite(handshake::MuxOp muxOp,

PatternRewriter &rewriter) const override {

// Retrieve all mux inputs except the `select`

ValueRange dataOperands = muxOp.getDataOperands();

// Create a merge in the IR at the mux's position and with the same data

// inputs (or operands, in MLIR jargon)

handshake::MergeOp mergeOp =

rewriter.create<handshake::MergeOp>(muxOp.getLoc(), dataOperands);

// Make the merge part of the same basic block (BB) as the mux

inheritBB(muxOp, mergeOp);

// Retrieve the merge's output (or result, in MLIR jargon)

Value mergeResult = mergeOp.getResult();

// Replace usages of the mux's output with the new merge's output

rewriter.replaceOp(muxOp, mergeResult);

// Signal that the pattern succeeded in rewriting the mux

return success();

}

};

This rewrite pattern, called ReplaceMuxWithMerge, matches on operations of type handshake::MuxOp (the mux operation is part of the Handshake dialect) as indicated by its declaration. Eahc time the greedy pattern rewriter finds a mux in the IR, it will call the pattern’s matchAndRewrite method, providing it with the particular operation it matched on as well as with a PatternRewriter object to allow us to modify the IR. For this simple pass, we want to transform all muxes into merges so the rewrite pattern is very short:

- First, we extract the mux’s data inputs.

- Then, we create a merge operation at the same location in the IR and with the same data inputs.

- Finally, we tell the rewriter to replace the mux with the merge. This “rewires” the IR by making users of the mux’s output channel use the merge’s output channel instead, and deleted the original mux.

To complete the pass implementation, we simply have to provide the rewrite pattern to the greedy pattern rewriter. Just add the following call to patterns.add inside runDynamaticPass after declaring the pattern set.

RewritePatternSet patterns(ctx);

patterns.add<ReplaceMuxWithMerge>(ctx);

Congratulations! You have now implemented your first Dynamatic pass. We just have two simple file edits to make before we can start using it.

Adding our Pass to the Build Process

We need to make the build process aware of the new source file we just wrote. Navigate to lib/Transforms/CMakeLists.txt and add the name of thefile you created in the previous section next to other .cpp files in the add_dynamatic_library statement.

add_dynamatic_library(DynamaticTransforms

HandshakeMuxToMerge.cpp # Add this line

ArithReduceStrength.cpp

... # other .cpp files

DEPENDS

...

)

Making our Pass Visible

Finally, we need to make Dynamatic’s optimizer aware of our new pass. Navigate to include/dynamatic/Transforms/Passes.h and add the header you wrote a couple of subsections ago to the list of include files.

#ifndef DYNAMATIC_TRANSFORMS_PASSES_H

#define DYNAMATIC_TRANSFORMS_PASSES_H

#include "dynamatic/Transforms/HandshakeMuxToMerge.h" // Add this line

... // other include files

Testing our Pass

Now that the pass is part of the project’s source code, we just have to partially re-build Dynamatic to use it. Simply navigate to the top-level build directory from the terminal and run ninja.

cd build && ninja && cd ..

If you see Build successfull printed on the terminal, then everything worked and the pass is now part of Dynamatic. Let’s go modify our compilation script—which is called by the frontend’s compile command—to run it as part of the normal synthesis flow.

Open tools/dynamatic/scripts/compile.sh and locate the following call to Dynamatic’s optimizer:

# handshake transformations

"$DYNAMATIC_OPT_BIN" "$F_HANDSHAKE" \

--handshake-minimize-lsq-usage \

--handshake-concretize-index-type="width=32" \

--handshake-minimize-cst-width --handshake-optimize-bitwidths="legacy" \

--handshake-materialize --handshake-infer-basic-blocks \

> "$F_HANDSHAKE_TRANSFORMED"

exit_on_fail "Failed to apply transformations to handshake" \

"Applied transformations to handshake"

This is a compilation step where we apply a number of optimizations/transformation to our Handshake-level IR for performance and correctness, and is thus a perfect place to insert our new pass. Remember that we declared our pass in Tablegen to be associated with the --handshake-mux-to-merge optimizer flag. We just have to add the flag to the optimizer call to run our new pass.

# handshake transformations

"$DYNAMATIC_OPT_BIN" "$F_HANDSHAKE" \

--handshake-mux-to-merge \

--handshake-minimize-lsq-usage \

--handshake-concretize-index-type="width=32" \

--handshake-minimize-cst-width --handshake-optimize-bitwidths="legacy" \

--handshake-materialize --handshake-infer-basic-blocks \

> "$F_HANDSHAKE_TRANSFORMED"

exit_on_fail "Failed to apply transformations to handshake" \

"Applied transformations to handshake"

Done! Now you can re-run the same frontend script as earlier (./bin/dynamatic --run tutorials/Introduction/Ch2/loop-multiply.dyn) to see the results of your work! Note that the circuit still functionally verifies during the simulate step as the frontend prints [INFO] Simulation succeeded.

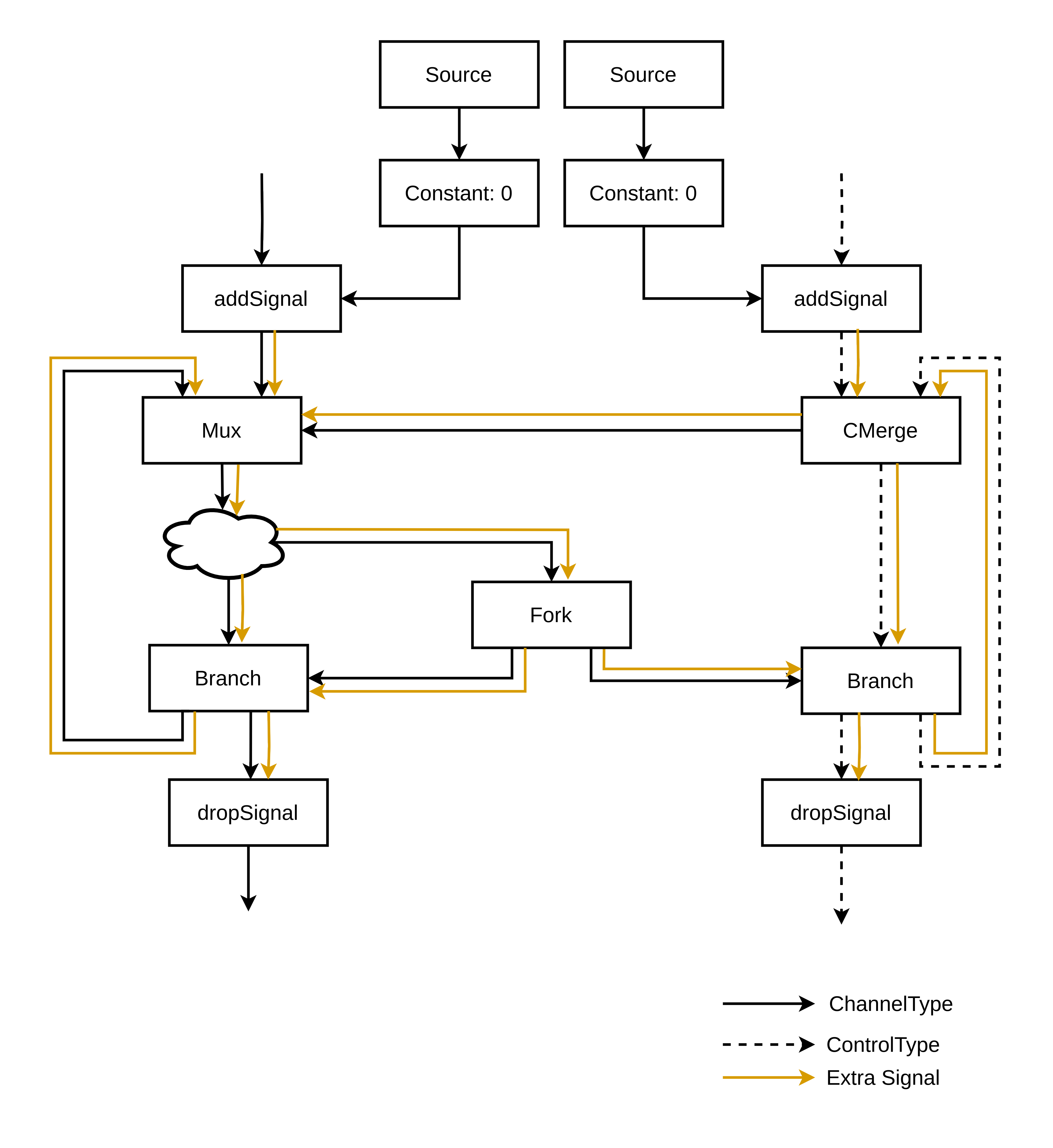

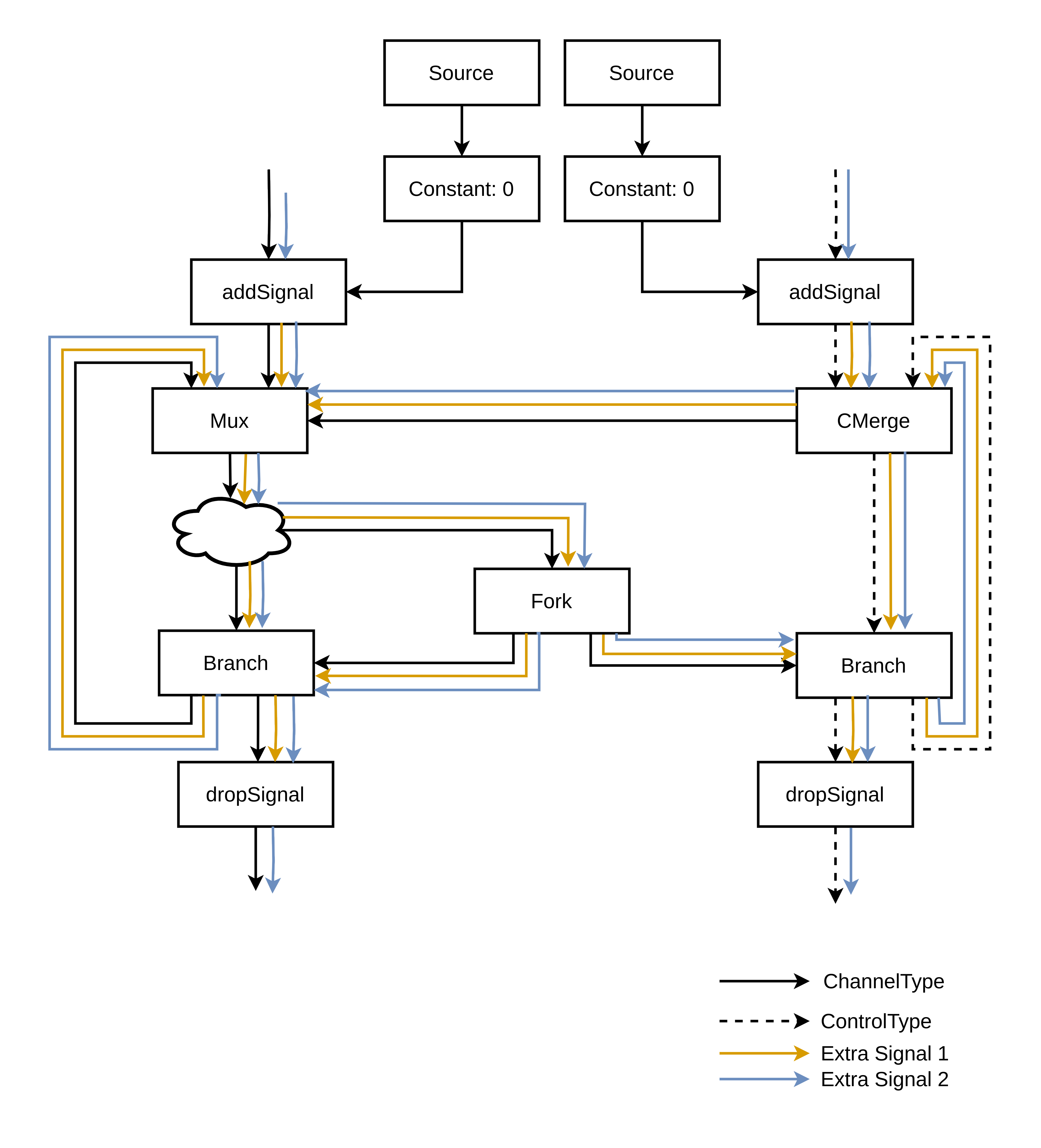

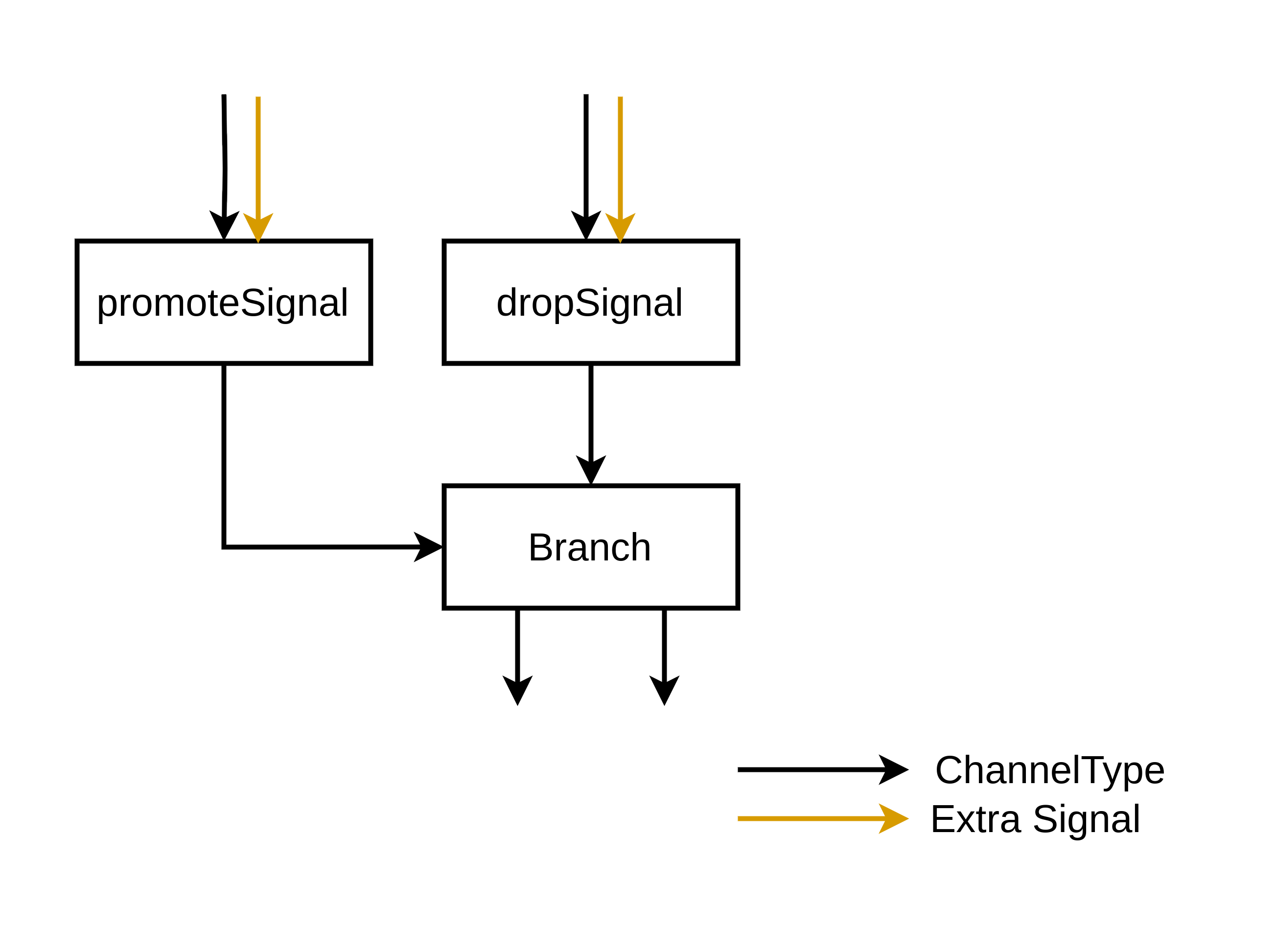

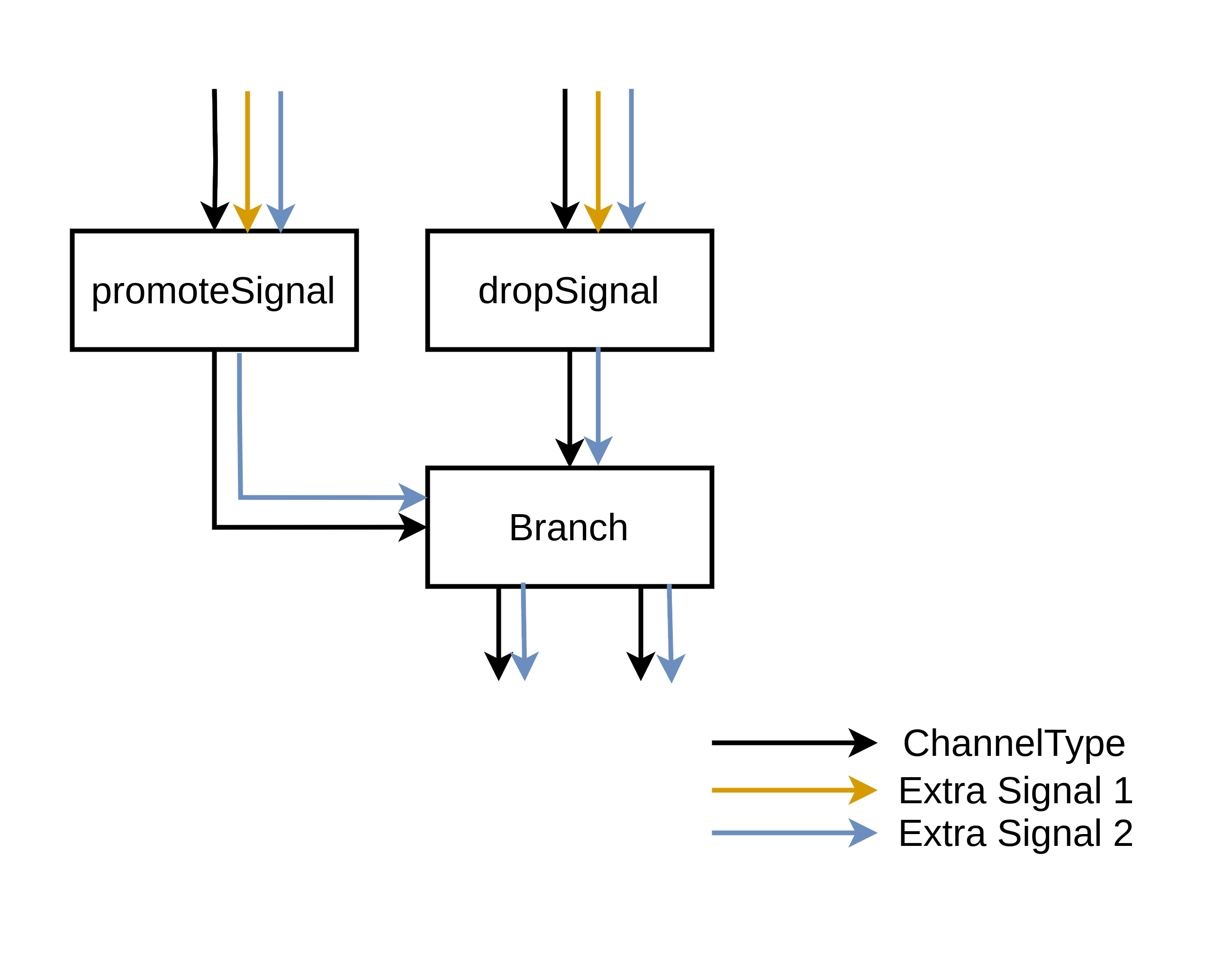

tip

Notice that all muxes have been turned into merges. Also observe that there are no control merges left in the circuit. Indeed, a control merge is just a merge with an additional index output indicating which valid data input was selected. The IR no longer uses any of these index outputs since muxes have been deleted, so Dynamatic automatically downgraded all control merges to simpler and cheaper merges to save on circuit area.

Surely this will work on all circuits, which will from now on all be smaller than before, right?

A problem, and a Solution!

Just to be sure, let’s try our optimization on a different yet similar C kernel called loop_store.

// The number of loop iterations

#define N 8

// The kernel under consideration

void loop_store(inout_int_t a[N]) {

for (unsigned i = 0; i < N; ++i) {

unsigned x = i;

if (a[i] == 0)

x = x * x;

a[i] = x;

}

}

You can find the source code of this function in tutorials/Introduction/Ch2/loop_store.c

This has the same rough structure as our previous example, except that now the kernel stores the squared iteration index in the array at each iteration where the corresponding array element is 0; otherwise it stores the index itself.

Now run the tutorials/Introduction/Ch2/loop-store.dyn frontend script. It is almost identical to the previous frontend script we used; its only difference is that it synthesizes loop_store.c instead of loop_multiply.c.

./bin/dynamatic --run tutorials/Introduction/Ch2/loop-store.dyn

Observe the frontend’s output when running simulate. You should see the following.

dynamatic> simulate

[INFO] Built kernel for IO gen.

[INFO] Ran kernel for IO gen.

[INFO] Launching Modelsim simulation

[ERROR COMPARE] Token mismatch: [0x00000000] and [0x00000001] are not equal (at transaction id 0).

[FATAL] Simulation failed

That’s bad! It means that the content of the kernel’s input array a was different after exceution of the C code and after simulation of the generated VHDL design for it. Our optimization broke something in the dataflow circuit, yielding an incorrect result.

tip

If you would like, you can make sure that it is indeed our new pass that broke the circuit by removing the --handshake-mux-to-merge flag from the compile.sh script and re-running the loop-store.dyn frontend script. You will see that the frontend prints [INFO] Sumulation succeeded instead of the failure message we just saw.

Let’s go check the simulate command’s output folder to see the content of the array a before and after the kernel. First, open the file tutorials/Introduction/Ch2/out/sim/INPUT_VECTORS/input_a.dat. This contains the initial content of array a before the kernel executes. Each line between the [[transation]] tags represent one element of the array, in order. As you can see, elements at even indices have value 0 whereas elements at odd indices have value 1.

[[[runtime]]]

[[transaction]] 0

0x00000000

0x00000001

0x00000000

0x00000001

0x00000000

0x00000001

0x00000000

0x00000001

[[/transaction]]

[[[/runtime]]]

Looking back at our C kernel, we then should expect that every element at an even index becomes the square of its index, whereas elements at at odd index become their index. This is indeed what we see in tutorials/Introduction/Ch2/out/sim/C_OUT/output_a.dat, which stores the array’s content after kernel execution.

[[[runtime]]]

[[transaction]] 0

0x00000000

0x00000001

0x00000004

0x00000003

0x00000010

0x00000005

0x00000024

0x00000007

[[/transaction]]

[[[/runtime]]]

tip

Let’s now see what the array a looks like after simulation of our dataflow circuit. Open tutorials/Introduction/Ch2/out/sim/VHDL_OUT/output_a.dat and compare it with the C output.

[[[runtime]]]

[[transaction]] 0

0x00000001

0x00000000

0x00000003

0x00000004

0x00000005

0x00000010

0x00000007

0x00000024

[[/transaction]]

[[[/runtime]]]

This is significantly different! It looks like elements are shuffled compared to the expected output, as if they were being reordered by the circuit. Let’s look at the dataflow visualizer on this new dataflow circuit and try to find out what happened.

tip

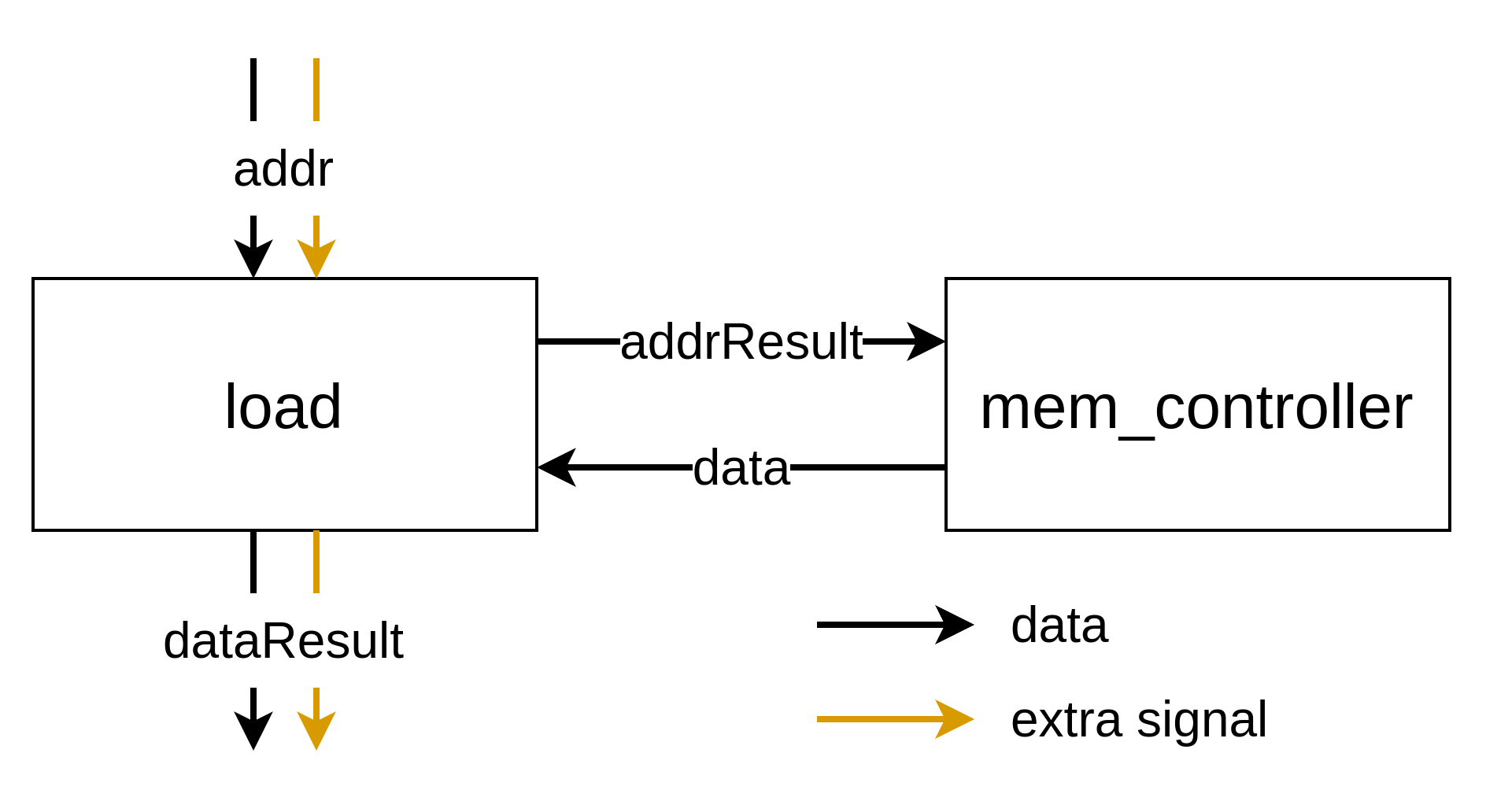

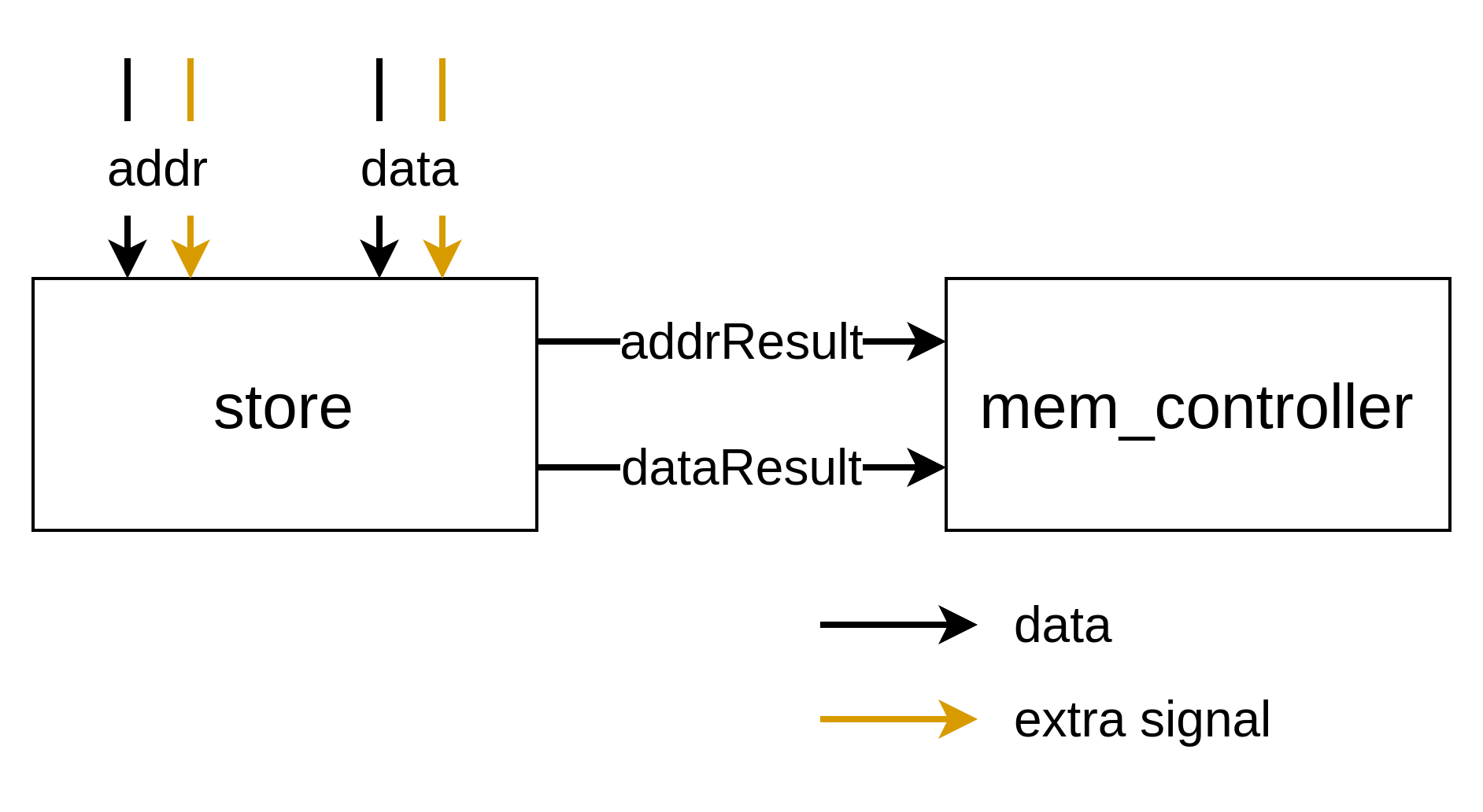

As the simulation’s output indicates, the array’s content is wrong even at the first iteration. We expect 0 to be stored in the array but instead we get a 1. To debug this problem, iterate through the simulation’s cycles and locate the first time that the store port (mc_store0) transfers a token to the memory controller (mem_controller0). Then, from the circuit’s structure, infer which input to the mc_store0 node is the store address, and which is the store data.

We are especially interested in the store’s data input, since it is the one feeding incorrect tokens into the array.

tip

Once you have identified the store’s data input and the first cycle at which it transfers a token to the memory controller, backtrack through cycles to see where the data token came from. You should notice something that should not be happening there. Remember that this is the first time the store transmits to the memory so the data token is supposed to come from the multiplier (mul1) since a[0] := 0 at the beginning. Also remember that the issue must ultimately come from a merge, since those are the only components we modified with our pass.

By replacing the mux previosuly in the place of merge10, we caused data tokens to arrive reordered at the store port, hence creating incorrect writes to memory! This is due to the fact that the loop’s throughput is much higher when the if branch is not taken, since the multiplier has a latency of 4 cycles while most of our other components have 0 sequential latency.

Let’s go verify that we are correct by modifying manually the IR that ultimately gets transformed into the dataflow circuit and re-simulating. Open the tutorials/Introduction/Ch2/out/comp/handshake_export.mlir MLIR file. It contains the last version of MLIR-formatted IR that gets transformed into a Graphviz-formatted file and then in a VHDL design. While the syntax may be a bit daunting at first, do not worry as we will only modify two lines to “revert” the transformation of the mux into merge10. The tutorial’s goal is not to teach you MLIR syntax, so we will not go into details into how the IR is formatted in text. To give you an idea, the syntax of an operation is usually as follows.

<SSA results> = <operation name> <SSA operands> {<operation attributes>} : <return types>

Back to our faulty IR; on line 31, you should see the following.

%23 = merge %22, %16 {bb = 3 : ui32, name = #handshake.name<"merge10">} : i10

As the name operation attribute indicates, this is the faulty merge10 we identified in the visualizer. Replace the entire line with an equivalent mux.

%23 = mux %muxIndex [%22, %16] {bb = 3 : ui32, name = #handshake.name<"my_mux">} : i1, i10

Before the square brackets is the mux’s select operand: %muxIndex. This SSA value currently does not exist in the IR, since it used to come from block 3’s control merge that has since then been downgraded to a simple merge due to its index output becoming unused. Let’s upgrade it again, it is located on line 40.

%32 = merge %trueResult_2, %falseResult_3 {bb = 3 : ui32, name = #handshake.name<"merge2">} : none

Replace it with

%32, %muxIndex = control_merge %trueResult_2, %falseResult_3 {bb = 3 : ui32, name = #handshake.name<"my_control_merge">} : none, i1

And you are done! For convenience we provide a little shell script that will only run the part of the synthesis flow that comes after this file is generated. It will regenerate the VHDL design from the MLIR file, simulate it, and open the visualizer. From Dynamatic’s top-level folder, run the provided shell script

./tutorials/Introduction/Ch2/partial-flow.sh

You should now see that simulation succeeds!

tip

Study the fixed circuit in the visualizer to confirm that a mux is indeed necessary to ensure proper ordering of data tokens to the store port.

Conclusion

As we just saw, our pass does not work in every situation. While it is possible to replace some muxes by merges when there is no risk of token re-ordering, this is not true in general for all merges. You would need to design a proper strategy to identify which muxes can be transformed into simpler merges and which are necessary to ensure correct circuit behavior. If you ever design such an algorithm, please consider making a pull request to Dynamatic! We accept contibutions ;)

Using Dynamatic

note

Before moving forward with this section, ensure that you have installed all necessary dependencies and built Dynamatic. If not, follow the simple build instructions.

This section covers:

- how to use Dynamatic

- constructs to include and invalid C/C++ features (see Kernel Code Guidelines)

- Dynamatic commands and respective flags.

Introduction to Dynamatic

note

The virtual machine does not contain an MILP solver (Gurobi). Unfortunately, this will affect the circuits you generate as part of the exercises and you may obtain different results from what the tutorial describes.

This tutorial guides you through the

- compilation of a simple kernel function written in C into an equivalent VHDL design

- functional verification of the resulting dataflow circuit using Modelsim

- visualization of the circuit using our custom interactive dataflow visualizer.

The tutorial assumes basic knowledge of dataflow circuits but does not require any insight into MLIR or compilers in general.

Below are some technical details about this tutorial.

- All resources are located in the repository’s tutorials/Introduction/Ch1 folder.

- All relative paths mentionned throughout the tutorial are assumed to start at Dynamatic’s top-level folder.

This tutorial is divided into the following sections:

- The Source Code | The C kernel function we will transform into a dataflow circuit.

- Using Dynamatic’s Frontend | We use the Dynamatic frontend to compile the C function into an equivalent VHDL design, and functionally verify the latter using Modelsim.

- Visualizing the Resulting Dataflow Circuit | We visualize the execution of the generated dataflow circuit on test inputs

- Conclusion | We reflect on everything we just accomplished

The C Source Code

Below is our target C function (the kernel, in Dynamic HLS jargon) for conversion into a dataflow circuit:

// The number of loop iterations

#define N 8

// The kernel under consideration

unsigned loop_multiply(int a[N]) {

unsigned x = 2;

for (unsigned i = 0; i < N; ++i) {

if (a[i] == 0)

x = x * x;

}

return x;

}

This kernel:

- multiplies a number by itself at each iteration of a loop from 0 to any number N where the corresponding element of an array equals 0.

- returns the calculated value after the loop exits.

tip

This function is purposefully simple so that it corresponds to a small dataflow circuit that will be easier to visually explore later on. Dynamatic is capable of transforming much more complex functions into fast and functional dataflow circuits.

You can find the source code of this function in tutorials/Introduction/Ch1/loop_multiply.c.

Observe!

- The

mainfunction in the file allows one to run the C kernel with user-provided arguments. - The

CALL_KERNELmacro inmain’s body calls the kernel while allowing us to automatically run code prior to and/or after the call. This is used during C/VHDL co-verification to automatically write the C function’s reference output to a file for comparison with the generated VHDL design’s output.

int main(void) {

in_int_t a[N];

// Initialize a to [0, 1, 0, 1, ...]

for (unsigned i = 0; i < N; ++i)

a[i] = i % 2;

CALL_KERNEL(loop_multiply, a);

return 0;

}

Using Dynamatic’s Frontend

Dynamatic’s frontend is built by the project in build/bin/dynamatic, with a symbolic link located at bin/dynamatic, which we will be using. In a terminal, from Dynamatic’s top-level folder, run the following:

./bin/dynamatic

This will print the frontend’s header and display a prompt where you can start inputting commands.

================================================================================

============== Dynamatic | Dynamic High-Level Synthesis Compiler ===============

======================== EPFL-LAP - v2.0.0 | March 2024 ========================

================================================================================

dynamatic> # Input your command here

set-src

Provide Dynamatic with the path to the C source code file under consideration. Ours is located at tutorials/Introduction/Ch1/loop_multiply.c, thus we input:

dynamatic> set-src tutorials/Introduction/Ch1/loop_multiply.c

note

The frontend will assume that the C function to transform has the same name as the last component of the argument to set-src without the file extension, here loop_multiply.

compile

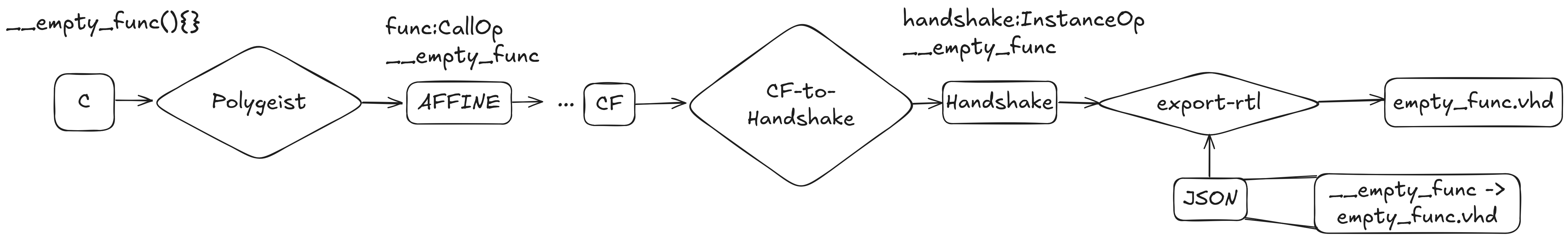

The first step towards generating the VHDL design is compilation. Here,

- the C source goes through our MLIR frontend (Polygeist)

- traverses a pre-defined sequence of transformation and optimization passes that ultimately yield a description of an equivalent dataflow circuit.

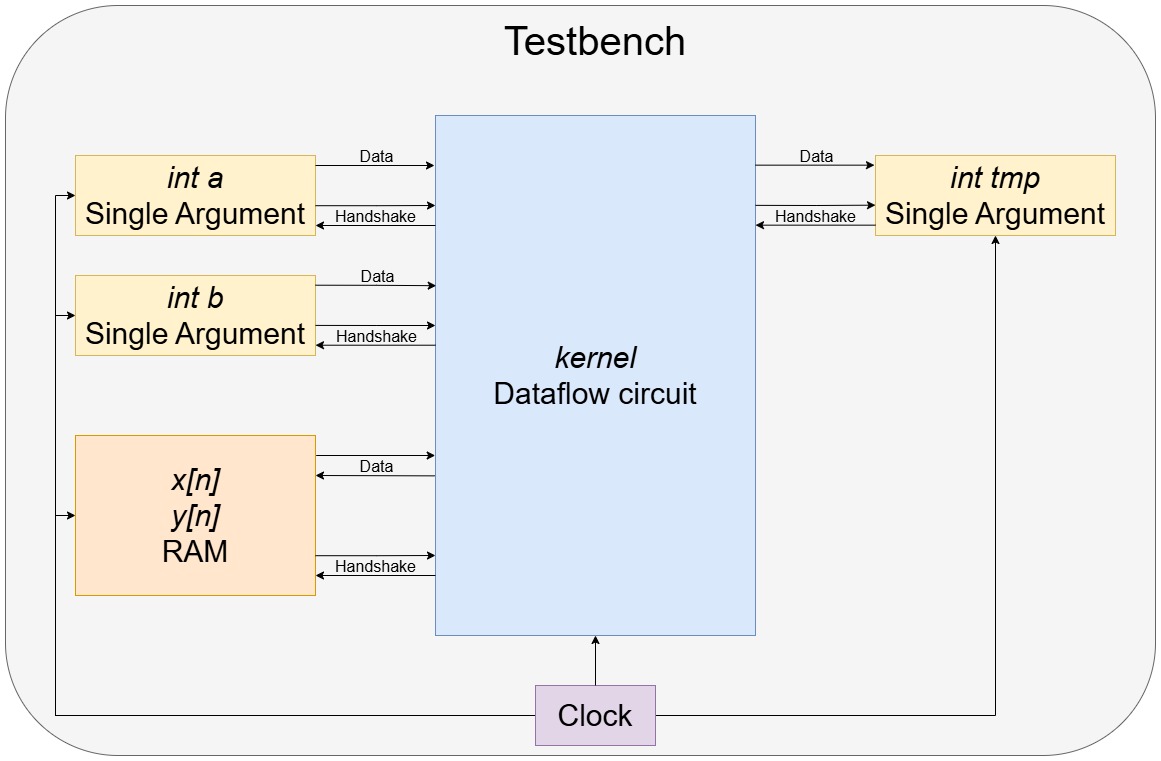

That description takes the form of a human-readable and machine-parsable IR (Intermediate Representation) within the MLIR framework. It represents dataflow components using specially-defined IR instructions (in MLIR jargon, operations) that are part of the Handshake dialect.

tip

A dialect is simply a collection of logically-connected IR entities like instructions, types, and attributes.

MLIR provides standard dialects for common usecases, while allowing external tools (like Dynamatic) to define custom dialects to model domain-specific semantics.

To compile the C function, simply input compile. This will call a shell script compile.sh (located at tools/dynamatic/scripts/compile.sh) in the background that will iteratively transform the IR into an optimized dataflow circuit, storing intermediate IR forms to disk at multiple points in the process.

dynamatic> set-src tutorials/Introduction/Ch1/loop_multiply.c

dynamatic> compile

Compile Flags

The compile flags are all optional and defaulted to no value.

--sharing enables credit-based resource sharing

--buffer-algorithm lets the compiler know which smart buffer placement algorithm to use. Requires Gurobi to solve MILP problems. There are two available options for this flag:

- fpga20: throughput-driven buffering

- fpl22 : throughput- and timing-driven buffering

The default for compile is to use the minimum buffering for correctness (simple buffer placement)

| flag | function | options |

|---|---|---|

| –sharing | use credit-based resource shaing | None |

| –buffer-alogithm | Indicate buffer placement algorithm to use, values are ‘on merges’ | fpga20, fpl22 |

warning

compile requires a MILP solver (Gurobi) for smart buffer placement. If you don’t have Gurobi, abstain from using the --buffer-algorithm flag

You should see the following printed on the terminal after running compile:

...

dynamatic> compile

[INFO] Compiled source to affine

[INFO] Ran memory analysis

[INFO] Compiled affine to scf

[INFO] Compiled scf to cf

[INFO] Applied standard transformations to cf

[INFO] Applied Dynamatic transformations to cf

[INFO] Compiled cf to handshake

[INFO] Applied transformations to handshake

[INFO] Running simple buffer placement (on-merges).

[INFO] Placed simple buffers

[INFO] Canonicalized handshake

[INFO] Created loop_multiply DOT

[INFO] Converted loop_multiply DOT to PNG

[INFO] Created loop_multiply_CFG DOT

[INFO] Converted loop_multiply_CFG DOT to PNG

[INFO] Lowered to HW

[INFO] Compilation succeeded

After successful compilation, all results are placed in a folder named out/comp created next to the C source file under consideration. In this case, it is located at tutorials/Introduction/Ch1/out/comp. It is not necessary that you look inside this folder for this tutorial.

note

A DOT file and equivalent PNG of the resulting circuit is generated after compilation (kernel_name.dot and kernel_name.png) and can be visualized using a DOT file reader or image viewer without installing the interactive visualizer.

In addition to the final optimized version of the IR (in tutorials/Introduction/Ch1/out/comp/handshake_export.mlir), the compilation script generates an equivalent Graphviz-formatted file (tutorials/Introduction/Ch1/out/comp/loop_multiply.dot) which serves as input to our VHDL backend, which we call using the write-hdl command.

write-hdl

This command converts the .dot file generated from compilation to the equivalent hardware description language implementation of our kernel.

...

[INFO] Compilation succeeded

dynamatic> write-hdl

[INFO] Exported RTL (vhdl)

[INFO] HDL generation succeeded

note

By default, the command generates VHDL implementations. This can be changed to verilog using the --hdl flag with the value verilog

Similarly to compile, this creates a folder out/hdl with a loop_multiply.vhd file and all other .vhd files necessary for correct functioning of the circuit. This design can finally be co-simulated along the C function on Modelsim to verify that their behavior matches using the simulate command.

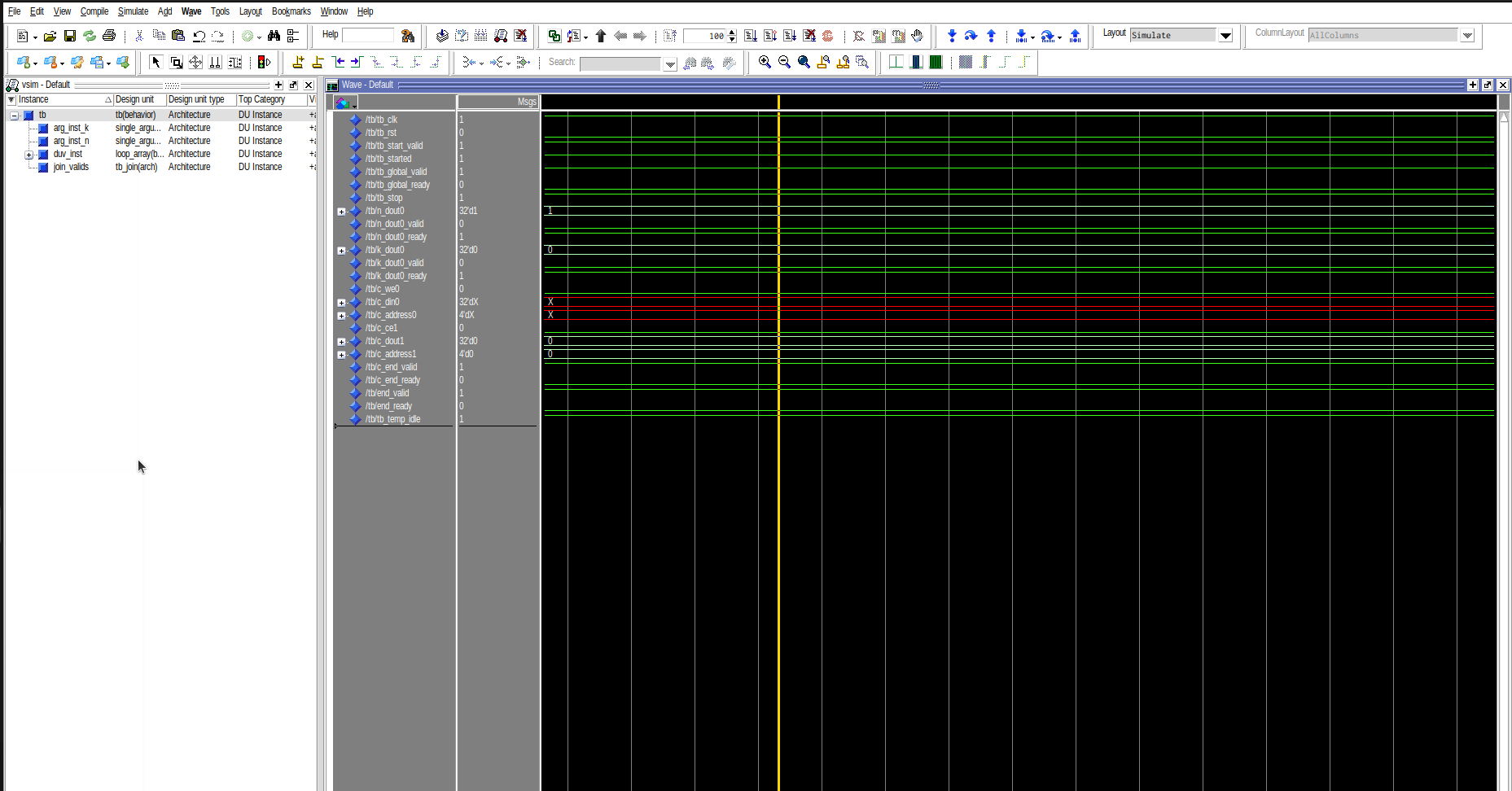

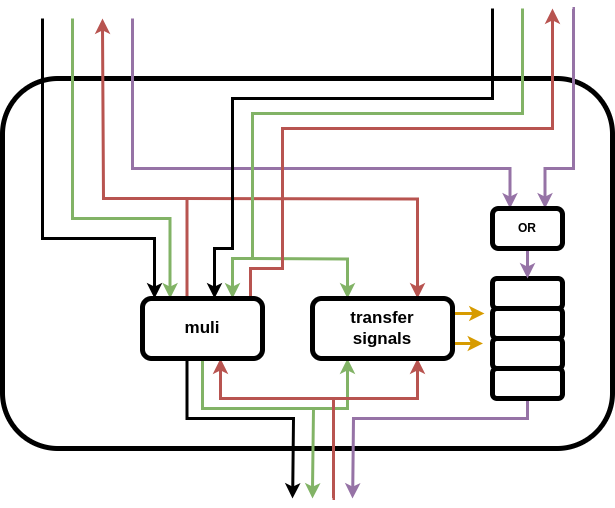

simulate

This command generates a testbench from the generated HDL code and feeds it inputs from the main function of our C code. It then runs a cosimulation of the C program and VHDL testbench to determine whether they yield the same results.

...

[INFO] HDL generation succeeded

dynamatic> simulate

[INFO] Built kernel for IO gen.

[INFO] Ran kernel for IO gen.

[INFO] Launching Modelsim simulation

[INFO] Simulation succeeded

The command creates a new folder out/sim. In this case, it is located at tutorials/Introduction/Ch1/out/sim. While it is not necessary that you look inside this folder for this tutorial, just know that it contains everything necessary to co-simulate the design:

- input C function

- VHDL entity values

- auto-generated testbench

- full implementation of all dataflow components, etc.

- everything generated by the co-simulation process (output C function and VHDL entitiy values, VHDL compilation logs, full waveform).

[INFO] Simulation succeeded indicates that the C function and VHDL design showcased the same behavior. This just means that

- their return values were the same after execution on kernel inputs computed in the

mainfunction. - if any arguments were pointers to memory regions,

simulatealso checked that the states of these memories are the same after the C kernel call and VHDL simulation.

That’s it, you have successfully synthesized your first dataflow circuit from C code and functionally verified it using Dynamatic!

At this point, you can quit the Dynamatic frontend by inputting the exit command:

...

[INFO] Simulation succeeded

dynamatic> exit

Goodbye!

If you would like to re-run these commands all at once, it is possible to use the frontend in a non-interactive way by writing the sequence of commands you would like to run in a file and referencing it when launching the frontend. One such file has already been created for you at tutorials/Introduction/Ch1/frontend-script.dyn. You can replay this whole section by running the following from Dynamatic’s top-level folder.

./bin/dynamatic --run tutorials/Introduction/Ch1/frontend-script.dyn

visualize

note

To use the visualize command, you will need to go through the interactive dataflow visualizer section in the Advanced Build section first.

At the end of the last section, you used the simulate command to co-simulate the VHDL design obtained from the compilation flow along with the C source. This process generated a waveform file at tutorials/Introduction/Ch1/out/sim/HLS_VERIFY/vsim.wlf containing all state transitions that happened during simulation for all signals. After a simple pre-processing step we will be able to visualize these transitions on a graphical representation of our circuit to get more insights into how our dataflow circuit behaves.

To launch the visualizer, re-open the frontend, re-set the source with set-src tutorials/Introduction/Ch1/loop_multiply.c, and input the visualize command.

$ ./bin/dynamatic

================================================================================

============== Dynamatic | Dynamic High-Level Synthesis Compiler ===============

==================== EPFL-LAP - <release> | <release-date> =====================

================================================================================

dynamatic> set-src tutorials/Introduction/Ch1/loop_multiply.c

dynamatic> visualize

[INFO] Generated channel changes

[INFO] Added positioning info. to DOT

dynamatic> exit

Goodbye!

tip

We do not have to re-run the previous synthesis steps because the data expected by the visualize command is still present on disk in the output folders generated by compile and simulate.

visualize creates a folder out/visual next to the source file (in tutorials/Introduction/Ch1/out/visual) containing the data expected by the visualizer as input.

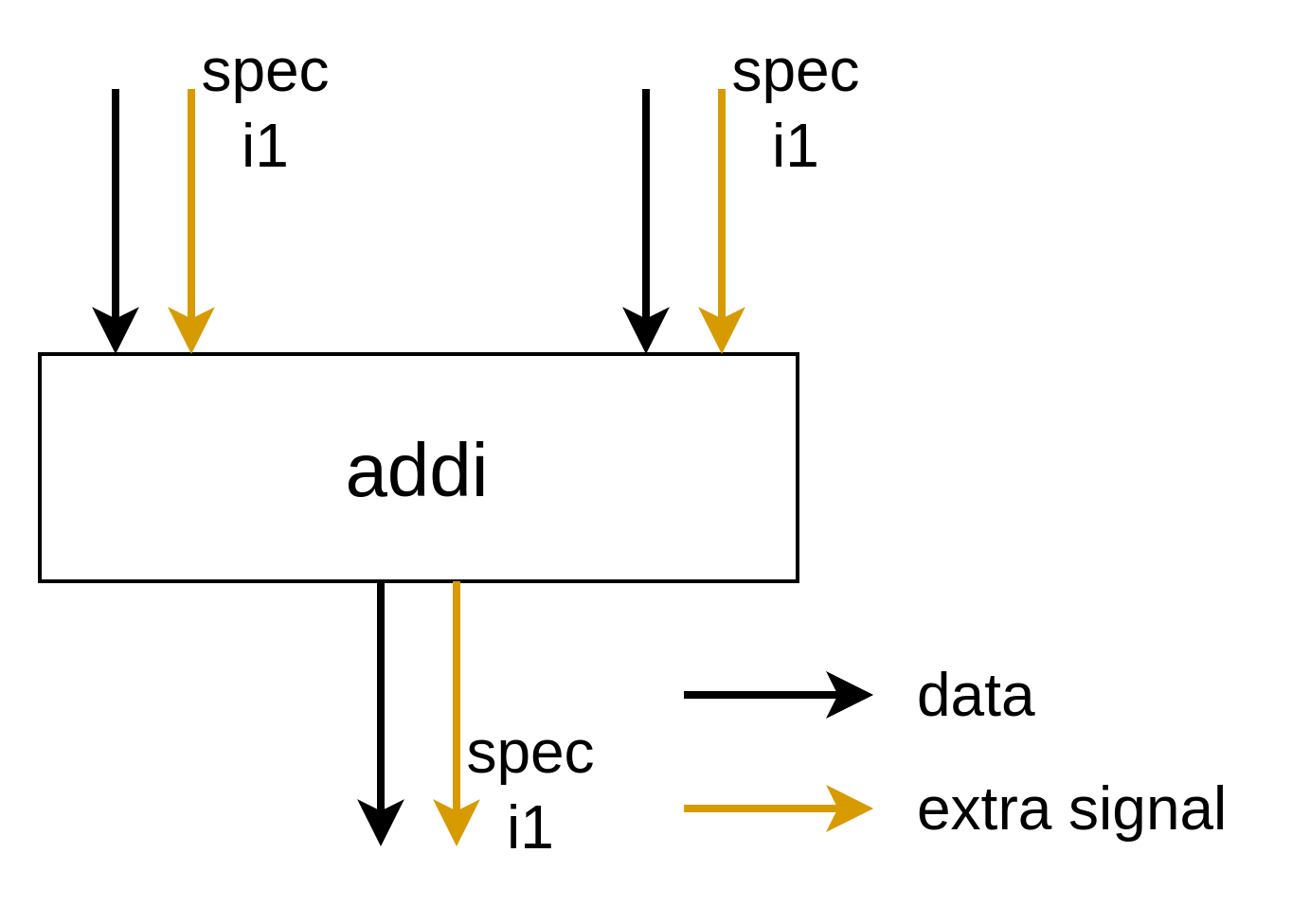

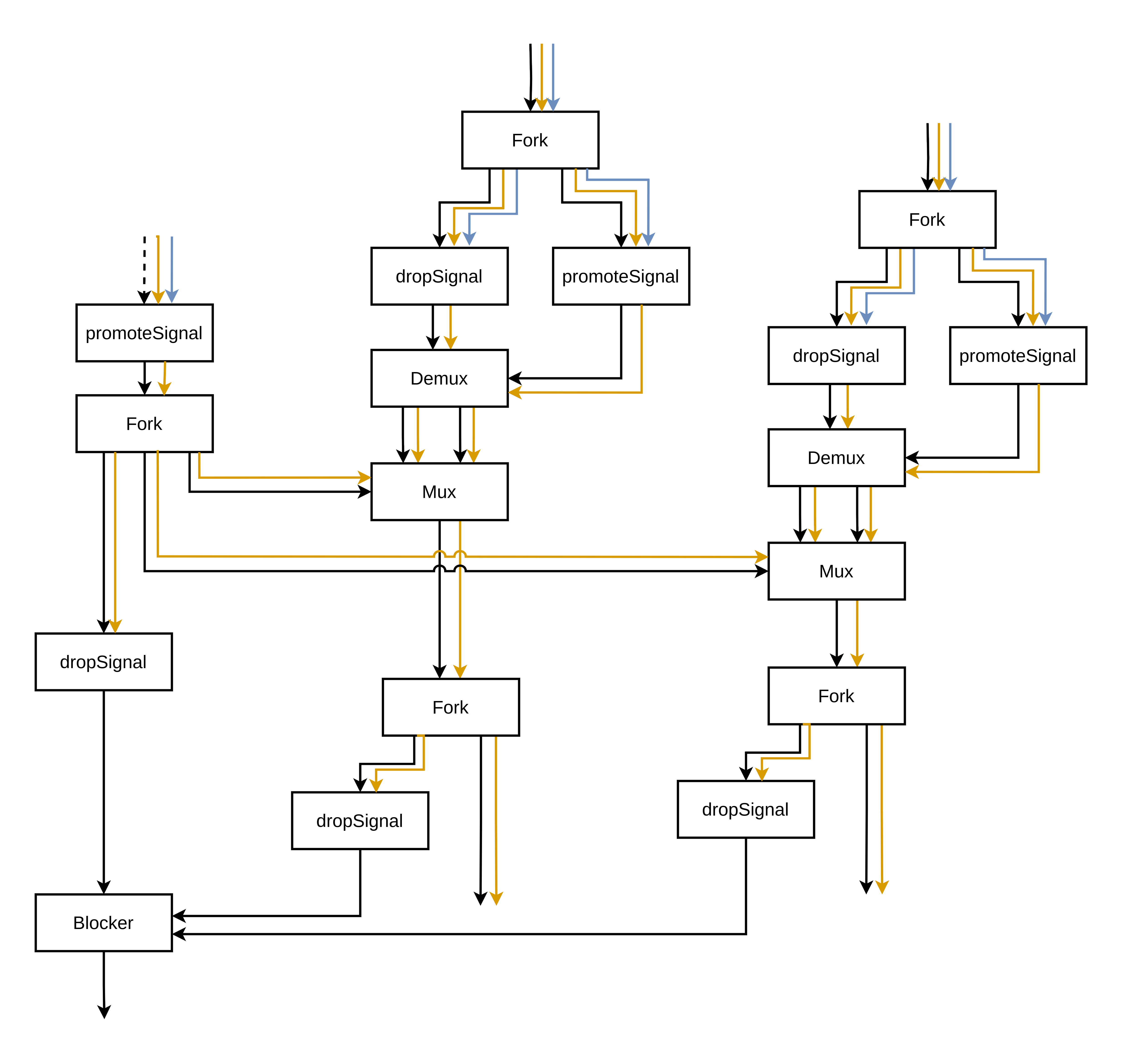

You should now see a visual representation of the dataflow circuit you just synthesized. It is basically a graph, where each node represents some kind of dataflow component and each directed edge represents a dataflow channel, which is a combination of two 1-bit signals and of an optional bus:

- A

validwire, going in the same direction as the edge (downstream). - A

readywire, going in the opposite direction as the edge (upstream). - An optional

databus of arbitrary width, going downstream. We display channels without a data bus (which we often refer to as control-only channels) as dashed.

During execution of the circuit, each combination of the valid/ready wires (a channel’s dataflow state) maps to a different color. You can see this mapping by clicking the Legend button on the top-right corner of the window. You can also change the mapping by clicking each individual color box and selecting a different color. There are 4 possible dataflow states.

Idle(valid=0,ready=0): the producer does not have a valid token to put on the channel, and the consumer is not ready to consume it. Nothing is happening, the channel is idle.Accept(valid=0,ready=1): the consumer is ready to consume a token, but the producer does not have a valid token to put on the channel. The channel is ready to accept a token.Stall(valid=1,ready=0): the producer has put a valid token on the channel, but the consumer is not ready to consume it. The token is stalled.Transfer(valid=1,ready=1): the producer has put a valid token on the channel which the consumer is ready to consume. The token is transferred.

The nodes each have a unique name inherited from the MLIR-formatted IR that was used to generate the input DOT file to begin with, and are grouped together based on the basic block they belong to. These are the same basic blocks used to represent control-free sequences of instructions in classical compilers. In this example, the original source code had 5 basic blocks, which are transcribed here in 5 labeled rectangular boxes.

tip

Two of these basic blocks represent the start and end of the kernel before and after the loop, respectively. The other 3 hold the loop’s logic. Try to identify which is which from the nature of the nodes and from their connections. Consider that the loop may have been slightly transformed by Dynamatic to optimize the resulting circuit.

There are several interactive elements at the bottom of the window that you can play with to see data flow through the circuit.

- The horizontal bar spanning the entire window’s width is a timeline. Clicking or dragging on it will let you go forward or backward in time.

- The

Playbutton will iterate forward in time at a rate of one cycle per second when clicked. Cliking it again will pause the iteration. - As their name indicates,

Prev cycleandNext cyclewill move backward or forward in time by one cycle, respectively. - The

Cycle:textbox lets you enter a cycle number directly, which the visualizer then jumps to.

tip

Observe the circuit executes using the interactive controls at the bottom of the window. On cycle 6, for example, you can see that tokens are transferred on both input channels of muli0 in block2. Try to infer the multiplier’s latency by looking at its output channel in the next execution cycles. Then, try to track that output token through the circuit to see where it can end up. Study the execution till you get an understanding of how tokens flow inside the loop and of how the conditional multiplication influences the latency of each loop iteration.

Conclusion

Congratulations on reaching the end of this tutorial! You now know how to use Dynamatic to compile C kernels into functional dataflow circuits, visualize these circuits to better understand them to identify potential optimization opportunities.

Before moving on to use Dynamatic for your custom programs, kindly refer to the Kernel Code Guidelines guide. You can also view a more detailed example that uses some of the optional commands not mentioned in this introductory tutorial.

We are now ready for an introduction to modiying Dynamatic. We will identify an optimization opportunity from the previous example and write a small transformation pass in C++ to implement our desired optimization, before finally verifying its behavior using the dataflow visualizer.

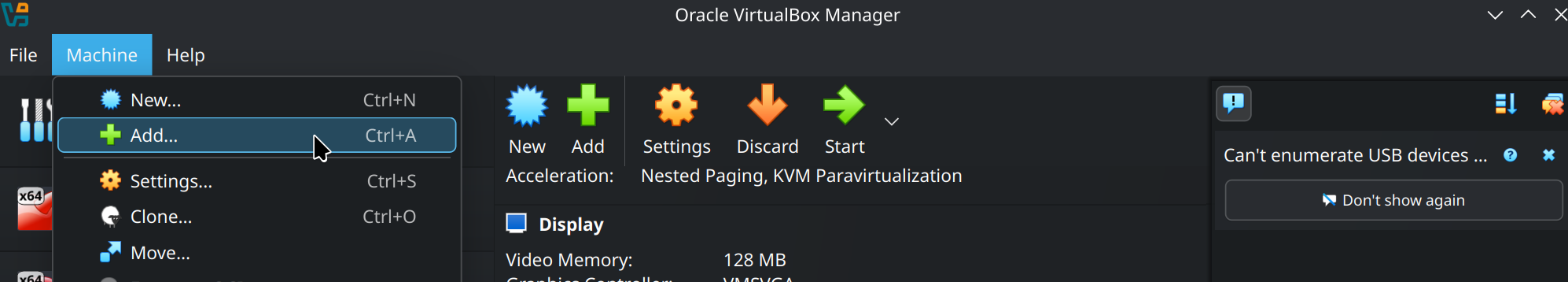

VM Setup Instructions

We provide a virtual machine (VM) which contains a pre-built/ready-to-use version of our entire toolchain except for Modelsim/Questa which the users must install themselves after setting up the VM. It is very easy to set up on your machine using VirtualBox. You can download the VM image here. The Dynamatic virtual machine is compatible with VirtualBox 5.2 or higher.

This VM was originally set-up for the Dynamatic Reloaded tutorial given at the FPGA’24 conference in Monterey, California. You can use it to simply follow the tutorial (available in the repository’s documentation) or as a starting point to use/modify Dynamatic in general.

Running the VM

Once you have downloaded the .zip archive from the link above, you can extract it and inside you will see two files The .vbox file contains all the settings required to run the VM, while the .vdi file contains the virtual hard drive. To load the VM, open VirtualBox and click on Machine - Add, then select the file DynamaticVM.vbox when prompted.

Then, you can run it by either clicking Start or simply double-clicking the virtual machine in the sidebar.

Inside the VM

If everything went well, after launching the image you should see Ubuntu’s splash screen and be dropped into the desktop directly. Below are some important things about the guest OS running on the VM.

- The VM runs Ubuntu 20.04 LTS. Any kind of “system/program error” reported by Ubuntu can safely be dismissed or ignored.

- The user on the VM is called dynamatic. The password is also dynamatic.

- On the left bar you have icons corresponding to a file explorer, a terminal, a web browser (Firefox).

- There are a couple default Ubuntu settings you may want to modify for your convenience. You can open Ubuntu settings by clicking the three icons at the top right of the Ubuntu desktop and then selecting Settings.

- You can change the default display resolution (1920x1080) by clicking on the Displays tab on the left, then selecting another resolution in the Resolution dropdown menu.

- You can change the default keyboard layout (English US) by clicking on the Keyboard tab on the left. Next, click on the + button under Input Sources, then, in the pop-menu that appears, click on the three vertical dots icon, scroll down the list, and click Other. Find your keyboard layout in the list and double-click it to add it to the list of input sources. Finally, drag your newly added keyboard layout above English (US) to start using it.

- When running commands for Dynamatic from the terminal, make sure you first

cdto thedynamaticsubfolder.- Since the user is also called dynamatic,

pwdshould display/home/dynamatic/dynamaticwhen you are in the correct folder.

- Since the user is also called dynamatic,

Advanced Build Instructions

Table of contents

- Gurobi

- Cloning

- Building

- Interactive Visualizer

- Enabling XLS Integration

- Modelsim/Questa sim installation

note

This document contains advanced build instructions targeted at users who would like to modify Dynamatic’s build process and/or use the interactive dataflow circuit visualizer. For basic setup instructions, see the installation page.

1. Gurobi

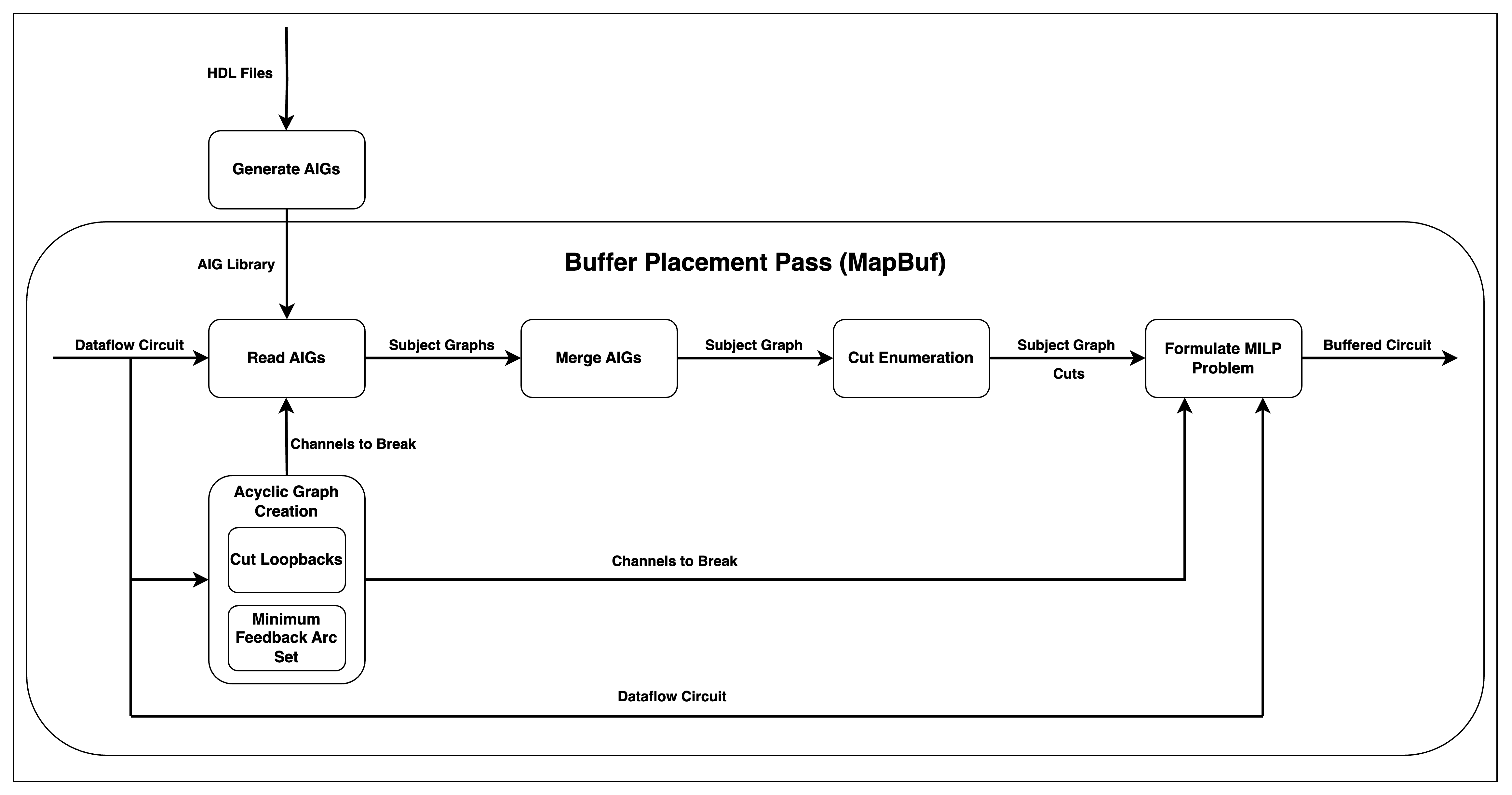

Why Do We Need Gurobi?

Currently, Dynamatic relies on Gurobi to solve performance-related optimization problems (MILP). Dynamatic is still functional without Gurobi, but the resulting circuits often fail to achieve acceptable performance.

Download Gurobi

Gurobi is available for Linux here (log in required). The resulting downloaded file will be gurobiXX.X.X_linux64.tar.gz

Obtain a License

Free academic licenses for Gurobi are available here.

Installation

To install Gurobi, first extract your downloaded file to your desired installation directory. We recommend to place this in /opt/, e.g. /opt/gurobiXXXX/linux64/ (with XXXX as the downloaded version). If extraction fails, try with sudo.

Use the following command to pass your obtained license to Gurobi, which it stores in ~/gurobi.lic

# Replace x's with obtained license

/opt/gurobiXXXX/linux64/bin/grbgetkey xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx

note

If you chose a web library (WLS license), copy the gurobi.lic file provided to your home directory rather than running the command above

Configuring Your Environment

In addition to adding Gurobi to your path, Dynamatic’s CMake requires the GUROBI_HOME environment variable to find headers and libraries. These lines can be added to your shell initiation script, e.g. ~/.bashrc or ~/.zshrc, or used with any other environment setup method.

# Replace "gurobiXXXX" with the correct version

export GUROBI_HOME="/opt/gurobiXXXX/linux64"

export PATH="${GUROBI_HOME}/bin:${PATH}"

export LD_LIBRARY_PATH="${GUROBI_HOME}/lib:$LD_LIBRARY_PATH"

Once Gurobi is set up, you can change the buffer placement algorithm using the --buffer-algorithm compile flag and setting the value to either fpga20 or fpl22. See Using Dynamatic page for details on how to use Dynamatic and modify the compile flags.

2. Cloning

The repository is set up so that LLVM is shallow cloned by default, meaning the clone command downloads just enough of them to check out currently specified commits. If you wish to work with the full history of these repositories, you can manually unshallow them after cloning.

For LLVM:

cd dynamatic/llvm-project

git fetch --unshallow

3. Building

This section provides some insights into our custom build script, build.sh, located in the repository’s top-level folder. The script recognizes a number of flags and arguments that allow you to customize the build process to your needs. The –help flag makes the script print the entire list of available flags/arguments and exit.

note

The script should always be ran from Dynamatic’s top-level folder.

General Behavior

The build script successively builds all parts of the project using CMake and Ninja. In order, it builds

- LLVM (with MLIR and clang as additional tools),

- Dynamatic, and

- (optionally) the interactive dataflow circuit visualizer (see instructions below).

It creates build folders in the top level directory and in each submodule to run the build tasks from. All files generated during build (libraries, executable binaries, intermediate compilation files) are placed in these folders, which the repository is configured to not track. Additionally, the build script creates a bin folder in the top-level directory that contains symbolic links to a number of executable binaries built by the superproject and subprojects that Dynamatic users may especially care about.

Debug or Release Mode

The build script builds the entire project in Debug mode by default, which enables assertions in the code and gives you access to runtime debug information that is very useful when working on Dynamatic’s code. However, Debug mode increases build time and (especially) build size (the project takes around 60GB once fully built). If you do not care for runtime debug information and/or want Dynamatic to have a smaller footprint on your disk, you can instead build Dynamatic in Release mode by using the --release flag when running the build script.

# Build Dynamatic in Debug mode

./build.sh

# Build Dynamatic in Release mode

./build.sh --release

Multi-Threaded Builds

By default, Ninja builds the project by concurrently using at most one thread per logical core on your machine. This can put a lot of strain on your system’s CPU and RAM, preventing you from using other applications smoothly. You can customize the maximum number of concurrent threads that are used to build the project using the –threads argument.

# Build using at most one thread per logical core on your machine

./build.sh

# Build using at most 4 concurrent threads

./build.sh --threads 4

It is also common to run out of RAM especially during linking of LLVM/MLIR. If this is a problem, consider limiting the maximum number of parallel LLVM link jobs to one per 15GB of available RAM, using the –llvm-parallel-link-jobs flag:

# Perform at most 1 concurrent LLVM link jobs

./build.sh --llvm-parallel-link-jobs 1

note

This flag defaults to a value of 2

Dockerfile

Dynamatic includes a Dockerfile that configures all the open-source dependencies. To build the dockerfile, run the following command in the root directory of Dynamatic:

$ docker build -t dynamatic-image . --build-arg UID=$(id -u) --build-arg GID=$(id -g)

To launch the Docker container, run the following command in the root directory of dynamatic:

$ docker run -it -u $(id -u):$(id -g) -v "$(pwd):/home/ubuntu/dynamatic" -w "/home/ubuntu/dynamatic" dynamatic-image /bin/bash

which will launch the Docker container and mount the current dynamatic directory in /home/ubuntu/dynamatic.

Then you can proceed with building and running Dynamatic, for example:

$ cd /home/ubuntu/dynamatic

# Build Dynamatic using the prebuilt LLVM and enable CBC-related features.

$ bash build.sh --use-prebuilt-llvm --enable-cbc

note

We mount Dynamatic in the container since if we build it inside the docker image, it losses the states after shutting down.

Forcing CMake Re-Configuration

To reduce the build script’s execution time when re-building the project regularly (which happens during active development), the script does not try to fully reconfigure each submodule or the superproject using CMake if it sees that a CMake cache is already present on your filesystem for each part. This can cause problems if you suddenly decide to change build flags that affect the CMake configuration (e.g., when going from a Debug build to a Release build) as the CMake configuration will not take into account the new configuration. Whenever that happens (or whenever in doubt), provide the --force flag to force the build script to re-configure each part of the project using CMake.

# Force re-configuration of every submodule and the superproject

./build.sh --force

tip

If the CMake configuration of each submodule and of the superproject has not changed since the last build script’s invocation and the –force flag is provided, the script will just take around half a minute more to run than normal but will not fully re-build everything. Therefore it is safe and not too inconvenient to specify the --force flag on every invocation of the script.

Enable Cbc MILP Solver

If you have difficulty installing the Gurobi solver or getting a license, you may use the open-source alternative Cbc solver instead:

sudo apt-get install coinor-cbc

# Build Dynamatic with Cbc enabled.

./build.sh --enable-cbc

Enable Legacy Chisel-Based LSQ Generator

Dynamatic previously uses RTL generators written in Chisel (a hardware construction language embedded in the high-level programming language Scala) to produce synthesizable RTL designs. You can install JDK and Scala using the recommended way with the following commands:

sudo apt-get install -y openjdk-21-jdk

curl -fL https://github.com/coursier/coursier/releases/latest/download/cs-x86_64-pc-linux.gz | gzip -d > cs && chmod +x cs && ./cs setup

To build dynamatic with legacy LSQ enabled, append the following flag when

calling the build.sh script:

./build.sh --build-legacy-lsq

4. Interactive Dataflow Circuit Visualizer

The repository contains an optionally built tool that allows you to visualize the dataflow circuits produced by Dynamatic and interact with them as they are simulated on test inputs. This is a very useful tool for debugging and for better understanding dataflow circuits in general. It is built on top of the open-source Godot game engine and of its C++ bindings, the latter of which Dynamatic depends on as a submodule rooted at visual-dataflow/godot-cpp (relative to Dynamatic’s top-level folder). To build and/or modify this tool (which is only supported on Linux at this point), one must therefore download the Godot engine (a single executable file) from the Internet manually.

note

Godot’s C++ bindings only work for a specific major/minor version of the engine. This version is specified in the branch field of the submodule’s declaration in .gitmodules. The version of the engine you download must therefore match the bindings currently tracked by Dynamatic. You can download any version of Godot from the official archive.

Due to these extra dependencies, building this tool is opt-in, meaning that

- by default it is not built along the rest of Dynamatic.

- the CMakeLists.txt file in visual-dataflow/ is meant to be configured independently from the one located one folder above it i.e., at the project’s root. As a consequence, intermediate build files for the tool are dumped into the

visual-dataflow/build/folder instead of the top-levelbuild/folder.

Building an executable binary for the interactive dataflow circuit visualizer is a two-step process, one which is automated and one which still requires some manual work detailed below.

- Build the C++ shared library that the Godot project uses to get access to Dynamatic’s API. The

--visual-dataflowbuild script flag performs this task automatically.

# Build the C++ library needed by the dataflow visualizer along the rest of Dynamatic

./build.sh --visual-dataflow

At this point, it becomes possible to open the Godot project (in the /dynamatic/visual-dataflow directory) in the Godot editor and modify/run it from there. Run your downloaded Godot file and open the project in the visual data-flow directory.

- export the Godot project as an executable binary to be able to run it from outside the editor. In addition to having downloaded the Godot engine, at the moment this also requires that the project has been exported manually once from the Godot editor. The Godot documentation details the process here, which you only need to follow up to and including the part where it asks you to download export templates using the graphical interface. Once they are downloaded for your specific export target, you are now able to automatically build the tool by using the

--export-godotbuild script argument and specifying the path to the Godot engine executable you downloaded.

Quick Steps From Godot Tutorial

- Download Godot

- Build Dynamatic with

--visual-dataflowflag - Run Godot (from the directory to which it was downloaded)

- Click

Editorin the top navigation bar and selectManage Export Templates - Click

Onlinebutton, download and Install Export Templates - Click

Projectbutton at top left of editor and select Export - Click the

Export PCK/ZIP...enter a name for your export and validate it

For more details, visit official godot engine website.

Finally, run the command below to export the Godot project as an executable binary that will be accessed by Dynamatic

# Export the Godot project as an executable binary

# Here it is a good idea to also provide the --visual-dataflow flag to ensure

# that the C++ library needed by the dataflow visualizer is up-to-date

./build.sh --visual-dataflow --export-godot /path/to/godot-engine

The tool’s binary is generated at visual-dataflow/bin/visual-dataflow and sym-linked at bin/visual-dataflow for convenience.

Now, you can visualize the dataflow graphs for your compiled programs with Godot. See how to use Dynamatic for more details.

note

Whenever you make a modification to the C++ library or to the Godot project itself, you can simply re-run the above command to recompile everything and re-generate the executable binary for the tool.

5. Enabling the XLS Integration

The experimental integration with the XLS HLS tool (see here for more information) can be enabled by providing the --experimental-enable-xls flag to build.sh.

note

--experimental-enable-xls, just like any other cmake-related flags, will only be applied if ./build.sh configures CMake, which it, by default, will not do if a build folder (with a CMakeCache.txt) exists. To enable xls if you already have a local build, you can either force a reconfigure of all projects by providing the --force flag, or delete the Dynamatic’s CMakeCache.txt to only force a reconfigure (and costly rebuild) of Dynamatic:

./build.sh --force --experimental-enable-xls

# OR

rm build/CMakeCache.txt

./build.sh --experimental-enable-xls

Once enabled, you do not need to provide ./build.sh with --experimental-enable-xls to re-build.

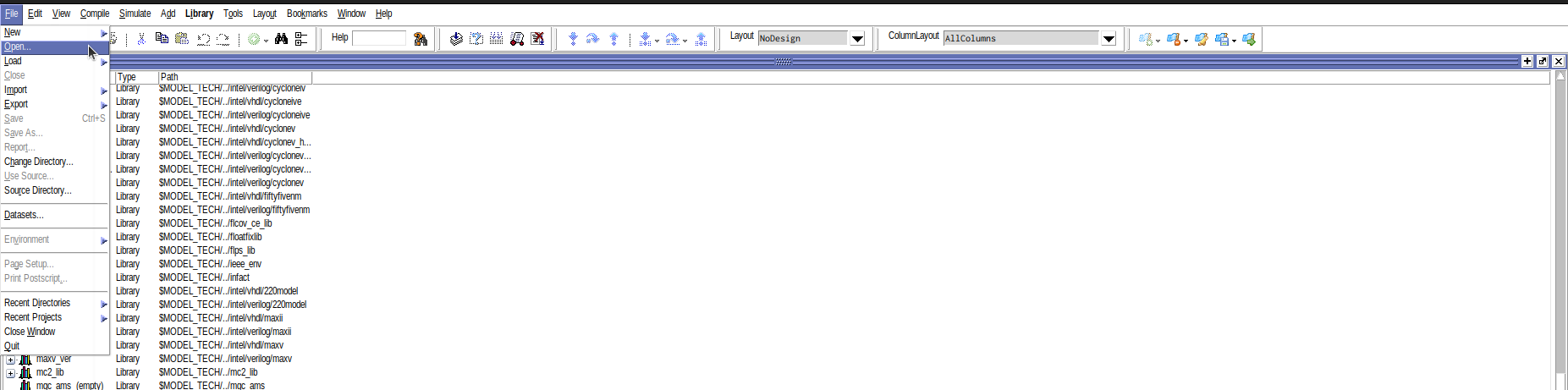

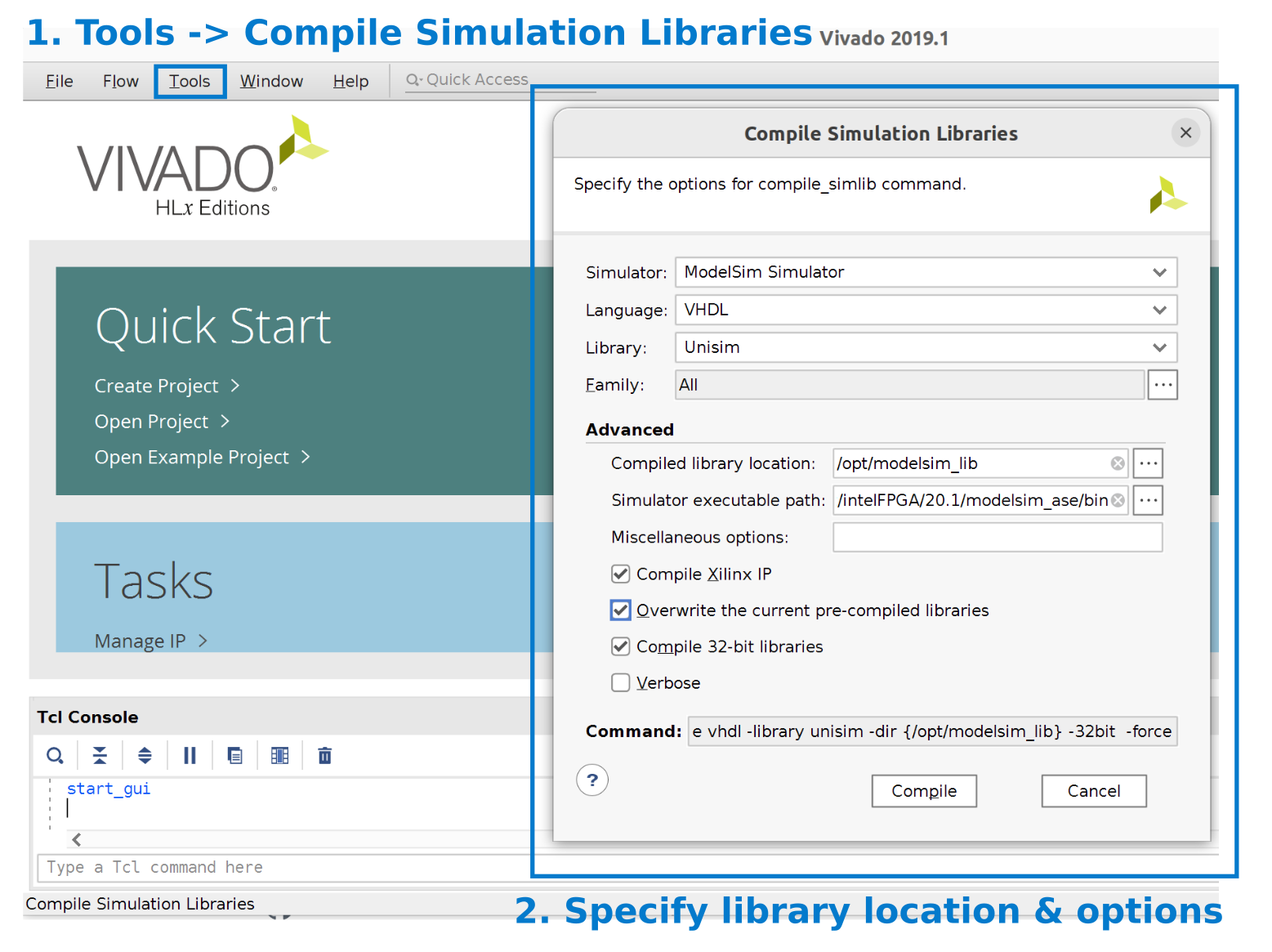

6. Modelsim/Questa Installation

Dynamatic uses Modelsim (has 32 bit dependencies) or Questa (64 bit simulator) to run simulations, thus you need to install it before hand. Download Modelsim or Questa, install it (in a directory with no special access permissions) and add it to path for Dynamatic to be able to run it. Add the following lines to the .bashrc file in your home directory to add modelsim to path variables.

note

Ensure you write the full path

export MODELSIM_HOME=/path/to/modelsim # path will look like /home/username/intelFPGA/20.1/modelsim_ase

export PATH="$MODELSIM_HOME/bin:$PATH" # (adjust the path accordingly)

or

export MODELSIM_HOME=/path/to/questa # path will look like home/username/altera/24.1std/questa_fse/

export PATH="$MODELSIM_HOME/bin:$PATH"

In any terminal, source .bashrc file and run the vsim command to verify that modelsim was added to path properly and runs.

source ~/.bashrc

vsim

If you encounter any issue related to libXext (if you installed Modelsim) you may need to install a few more libraries to enable the 32 bit architecture which supports packages needed by Modelsim:

sudo dpkg -add-architecture i386

sudo apt update

sudo apt install libxext6:i386 libxft2:i386 libxrender1:i386

If you are using Questa, running vsim will give you an error relating to the absence of a license.

To obtain a license (free or paid):

- Create an account on Intel’s Self Servicing License Center page. The page has detailed instructions on how to obtain a license.

- Request for a license. You will receive an authorization email with instructions on setting up a fixed or floating license (a fixed license suffices). This could take some minutes or up to a few hours.

- Download the license file and add it to path as shown below

#Questa license set up

export LM_LICENSE_FILE=/path/to/license/file # looks like this "home/username/.../LR-240645_License.dat:$LM_LICENSE_FILE"

export MGLS_LICENSE_FILE=/path/to/license/file # looks like this "/home/beta-tester/Downloads/LR-240645_License.dat"

export SALT_LICENSE_SERVER=/path/to/license/file # looks like this "/home/beta-tester/Downloads/LR-240645_License.dat"

note

You may need only one of the three lines above based on the version of Questa you are using. Refer to the release notes for the version you have installed. Having the three lines poses no issue nonetheless.

Analyzing Output Files

Dynamatic stores the compiled IR, generated RTL, simulation results, and useful intermediate data in the out/ directory.

Learning about these files is essential for identifying performance bottlenecks, gaining deeper insight into the generated circuits, exporting the generated design to integrate into your existing designs, etc.

This document provides guidance on the locations of these files and how to analyze them effectively.

Compilation Results

note

Compilation results are not essential for a user but can help in debugging. This requires some knowledge of MLIR.

- The

compilecommand creates anout/compdirectory that stores all the intermediate files as described in the Dynamatic HLS flow in the developer guide. - A file is created for every step of the compilation process, allowing the user to inspect relevant files if any unexpected behaviour results.

tip